Cisco Webex Experience Management Invitations Module Architecture Document

Content Outline

1. Introduction

In an economy where customer experience trumps price and product, the Cisco Webex Experience Management platform (referred to as Experience Management from here on) helps organizations exceed customer expectations and deliver business outcomes through its three pillars of customer experience:

- Measuring the customer journey: Understanding the customer experience through a continuous collection of moments across their journey.

- Obtaining a 360-degree view of the customer: Bringing disparate data together to enable actionable insights, proactive support, and advanced marketing automation.

- Becoming predictive: Understanding predictively how changes to the customer experience will affect outcomes and impact financial metrics.

One of the key steps in measuring customer experience is reaching out to customers over channels such as email, SMS, and web intercept to solicit feedback. Of all the survey distribution channels, email and SMS are two of the most popular. Global delivery invitation management enables a personalized experience for receiving survey invitations across various channels on the journey, using workflows that can be configured and reused across the chosen transmission channels, such as SMS and email, while requiring no PII in the Experience Management platform.

2. Key Benefits and Features

| Features | Benefits |

|---|---|

| Omni-channel survey invitation dispatch | Send invitations across multiple journey channels, giving end customers the choice of how they can interact with your business |

| Centralized delivery policy management via delivery templates | Manage organizational corporate communication policies for invitation dispatch such as time of day communication windows, channels for communication, and campaign workflow rules for follow-up messages. |

| Global data centers and data residency (PII and outbound delivery processed in sovereign territories) | Businesses operating in countries with government or industry regulations that require PII to be processed in the region can run a compliant solution. |

| Single instance hosted PII processing (no PII required by Experience Management, only hashed data needs to be transmitted) | Run a completely secure, zero PII solution with a AWS/Azure Cloud cloud instance that processes PII and dispatches invitations |

| Extensible architecture that supports ETL processing with serverless AWS Lambda or Azure functions for a scalable pipeline | Flexibility to customize and set up a big data processing pipeline with custom business logic based on the unique needs of your business |

| Content template upload and preview | Upload and test personalized content templates with easy substitutions that deliver improved response rates |

| Schedule-less delivery pipeline | Just like FedEx, tee up delivery batches. Based on your delivery policy, these get queued, and sent off in-flight. |

| Real-time notifications about bad data during ingress | Quickly take action to address bad data issues at the source system of your data pipeline. |

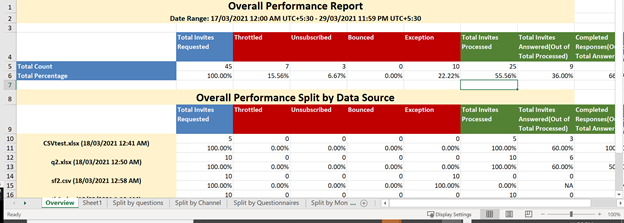

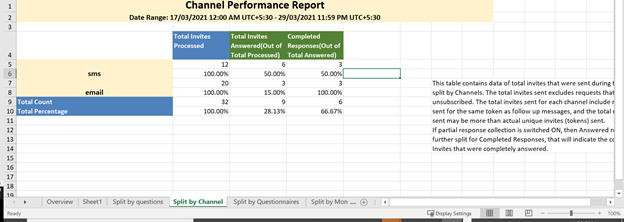

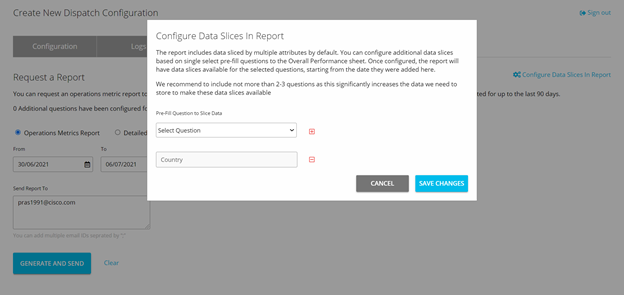

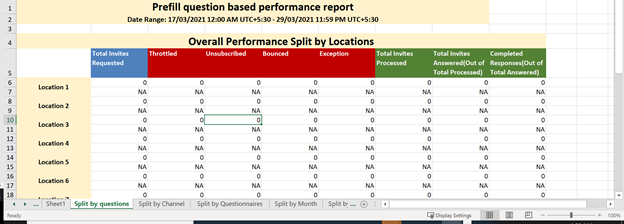

| EOD invitation downloadable reports with progress/success/failure status | Periodically review dispatch performance to continuously tune content templates, dispatch policies, and survey logic to optimize response rates. |

3. High-Level Workflow

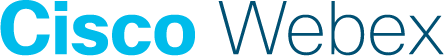

The diagram above shows, at a high level, how CCX/CCE/WCC consumes the Experience Management Invitations feature to send surveys to customers over email and SMS.

The “Dispatch request API” is the entry point for Cisco contact center products or 3rd party systems such as CRMs to consume the Experience Management Invitations feature. The diagram above also covers the various elements of the infrastructure provisioned in the AWS/Azure cloud to host the “cloud hosted module” of the Invitations feature.

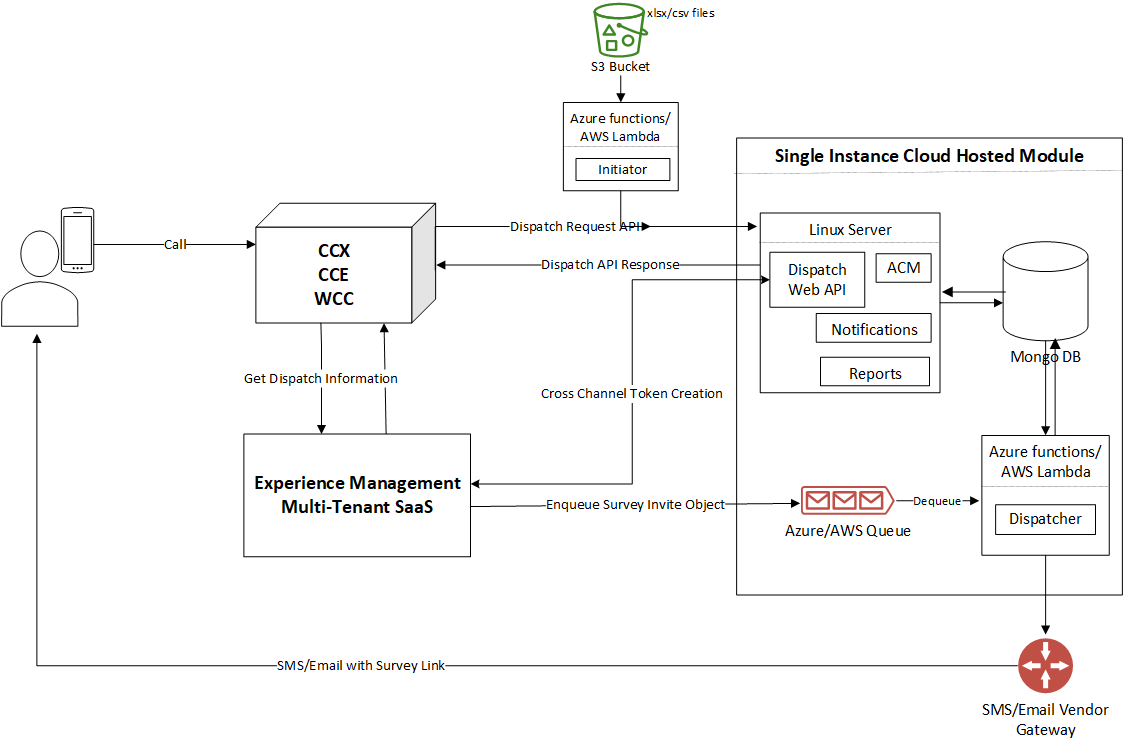

The diagram above zooms in and provides a high-level view of the workflow of the Invitations feature itself.

The Cloud hosted module of the Invitations solution is a single-tenant module, hosted on AWS or Microsoft Azure, that interfaces with the multi-tenant SaaS Experience Management. Everything on the left-hand side of the above diagram encapsulates the Cloud hosted module of the Invitations solution, and everything on the right-hand side depicts the multi-tenant SaaS modules. Both modules work together to form the Experience Management Invitations feature.

The components that make up the Cloud hosted module of the Invitations feature are given below:

- Dispatch request: This is the entry point into the Invitations module for any 3rd party system. A 3rd party system can make an API request to the invitations module to initiate sending emails and SMS

- Sampling: Customers may choose to send only a subset of all the records included in the batch request. This can be achieved by setting sampling rules either in Experience Management or can be extended using any custom logic

- Cross channel token creation: This component interfaces with Experience Management Delivery policy module to create Experience Management unique survey links that will be sent to end customers

- Database: This component holds all the data needed to be stored for the Invitations module to work

- Dispatcher: This component interfaces with 3rd party email and SMS vendors that help deliver the invitations to the recipients

- Initiator: This component consumes a .csv or .xlsx file to create its equivalent Dispatch Request. This is an optional component for CCE/CCX/WCC customers. However, it is a mandatory component for CWXM customers.

While the API can be deployed on a Linux server, the Dispatcher component is designed to run on serverless compute (AWS Lambda or Azure Functions). Hence, the cloud-hosted module has to be deployed on either AWS or Microsoft Azure cloud infrastructure.

4. Detailed Architecture

This section captures each component introduced above in more detail, covering the request/response patterns and the data flow between the components and the database. The upcoming sections dive into the specifics of each component and how it functions independently.

4.1 Dispatch Request

4.1.1 Dispatch Request Description

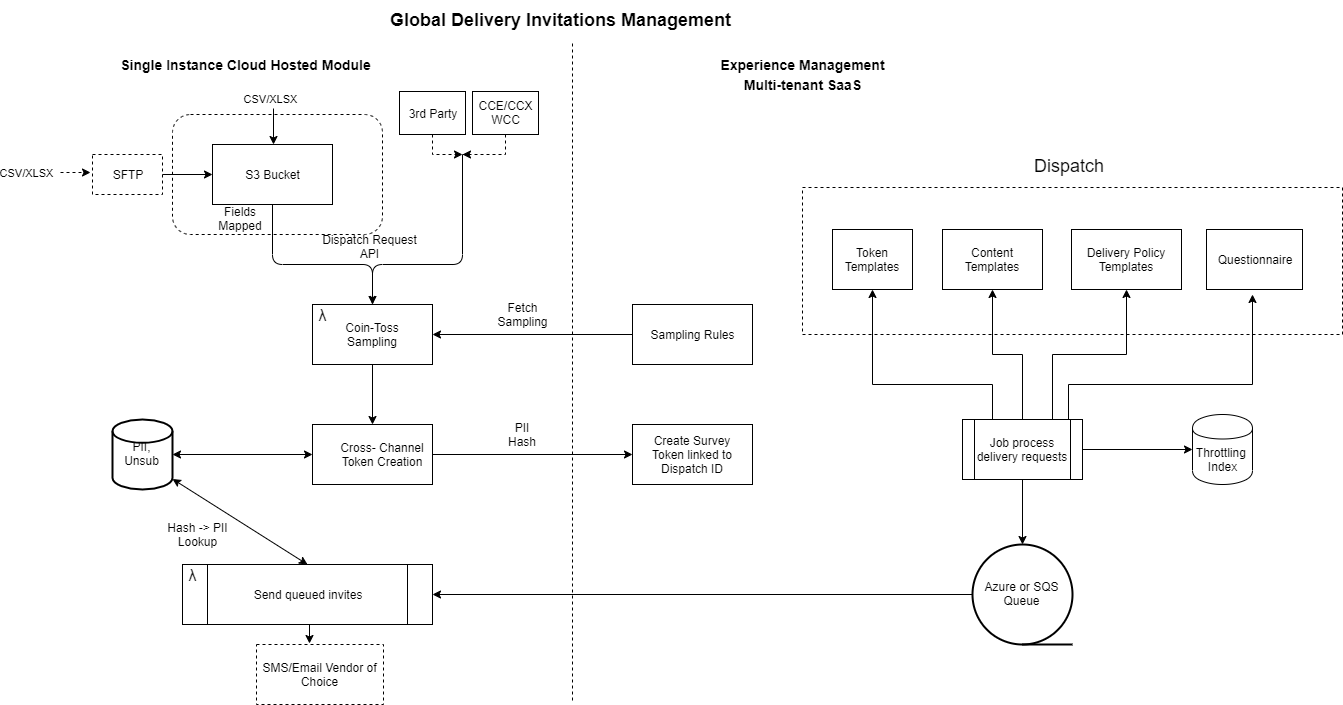

The above diagram depicts the detailed architecture diagram of the single-tenant Invitations module.

The Dispatch request API should be deployed and exposed to other Cisco products such as Cisco Contact Center Express or Cisco Contact Center Enterprise or even other 3rd party systems such as CRM systems, ERP systems, etc. These systems can make an API request with the details of all the records to be processed to send emails or SMS to end customers.

An overview of the sequence of events that happens within this component is as given below. This is basically how the component functions and no additional development activity is required to enable any of what is detailed below:

-

First checks if the request payload size is greater than the configurable parameter or the number of unique dispatches is more than the configurable parameter. If yes, then it rejects with PayloadTooLarge status code

-

Validates the bearer/basic token to confirm whether it is valid or not. All systems calling this API should send Bearer token. Also, validates if the user belongs to the same admin user family as stored in AccountConfiguration Collection

-

If valid token, the token is cached in MemoryCache for 15 mins. However, the time for caching is Configurable. Next time, if same token comes within 15 mins, just returns true. Also, caches ACM account config (from DB) which is also used to validate the token

-

If MemoryCache is empty (doesn’t have dispatch, DP, Questionnaire, Active questions, and Settings API), details will be fetched from Experience Management (over API calls) to proceed with validation process and these responses are cached for 1 hour. Subsequent requests during the next 1 hour will reuse the cached information

-

Checks if dispatch is valid or not. If invalid, returns 400 for that dispatch under 207 multi-status. Also, batches the records per dispatch here. After this step, if same dispatch are sent multiple times in same payload, it will be merged into one dispatch

-

If valid, runs against MongoDB AccountConfiguration collection to validate if dispatch is configured or not

-

If available, runs validations for mandatory fields vs channels, checks PII hashing and before hashing, converts the values to lowercase

-

In case of duplicate records which is identified by UUID parameter, all matching UUID value within the same dispatch of a request is rejected

-

Since we know the email ID/mobile number here, partner has the option to extend to support their own unsubscribe logic linking to client’s system

-

Creates an EventLog object and bulk API request messages

-

Pushes bulk API request object to in-memory concurrent queue to be batched and processed by another background scheduler

-

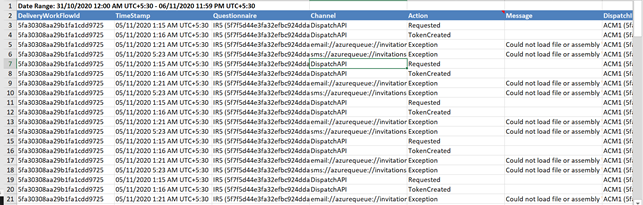

The EventLog records are inserted into the Mongo DB. There are two bulk inserts in the DB for the same records; one with the tag as “UserData” which is inserted per record and one with the tag as “DispatchAPI” which is inserted as a batch of DIWEF logs.

-

Returns status as per above architecture diagram

-

Any other exception will result in 500 with specific messages

- Background Scheduler process: this runs every 2 mins in production systems. The timer can be configured to 1 min, 2 min, 5 min, or 10 min.

  - The primary function of this process is to peek and dequeue, every 2 mins, from the in-memory queue that contains the bulk API requests, and to batch the payloads for the same dispatch before making the API call.

  - This is developed based on this implementation concept

  - The 1 min, 2 min, 5 min, and 10 min options are provided to avoid high CPU utilization when dispatches are infrequent. The default is 2 min.

  - As soon as the scheduler starts, it checks for data in the queue. If there is none, it waits for the next schedule. If data is available, it dequeues for up to 30 seconds; if the queue is emptied sooner, it moves on to further processing.

  - While dequeuing, requests are batched per Dispatch ID.

  - Since this is a separate process, an auth token is created and maintained in the memory cache just for the bulk token creation API call. The token is retrieved again 5 mins before it expires.

  - Calls the Experience Management Bulk token API sequentially, waiting 1 second after every API call before making the next.

  - Once the tokens are created and returned in a bulk response, parses each record, then batch finds and updates the MongoDB EventLog collection, so that each successful record has a token attached to it.

  - Any exception or API failure is logged in the DB as an EventLog record.

  - In case of any exception or failure, the token creation API is not tried again.
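The dequeue-and-batch step of the background scheduler can be sketched roughly as follows. This is an illustrative Python analogue under stated assumptions, not the module's actual implementation: the function name `drain_and_batch` and the record keys `dispatchId` and `records` are hypothetical, and only the 30-second dequeue window and the per-Dispatch-ID batching come from the description above.

```python
from collections import defaultdict
from queue import Empty, Queue
import time


def drain_and_batch(pending: Queue, max_seconds: float = 30.0) -> dict:
    """Dequeue until the queue is empty or the time budget is spent,
    grouping the dequeued records by dispatch ID."""
    batches = defaultdict(list)
    deadline = time.monotonic() + max_seconds
    while time.monotonic() < deadline:
        try:
            request = pending.get_nowait()
        except Empty:
            break  # queue drained early: move on to further processing
        # "dispatchId" and "records" are hypothetical field names.
        batches[request["dispatchId"]].extend(request["records"])
    return dict(batches)


# Three queued requests, two of which share a dispatch ID.
pending = Queue()
pending.put({"dispatchId": "dp1", "records": ["r1", "r2"]})
pending.put({"dispatchId": "dp2", "records": ["r3"]})
pending.put({"dispatchId": "dp1", "records": ["r4"]})
batches = drain_and_batch(pending)
```

Each entry of `batches` would then correspond to one bulk token API call, made sequentially with the 1-second pause described above.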

4.1.2 API Details

- Prerequisites to access APIs

  - Experience Management account which has different Dispatch IDs configured. Dispatch ID along with prefills should be passed in this API. Here is a link to the Invitations configuration guide

  - Experience Management Bearer token should be passed in the request header for authorization

  - Deployment of Web API to get the Base URL

- Method: POST

- API URL: Base URL + /api/dispatchRequest

- Authorization:

  - To access this API, a bearer token should be retrieved using the Experience Management LoginToken API and passed under the “Authorization” header as a bearer token

- API request:

  - For “prefill”, pass the Question ID of the prefill question created in Experience Management along with the actual value

  - dispatchID - generated by the Experience Management admin for an Experience Management account; it is a combination of Delivery policy, Content template and token template

  - Mandatory prefills - Based on the channel configured for the Delivery Policy in Experience Management, the request body should contain a value for the appropriate prefills

    - Email Channel - Required tag to look up in questions response to fetch questionID is “Email”. Pass the email ID under this prefill

    - SMS Channel - Required tag to look up in questions response to fetch questionID is “Mobile”. Pass the mobile number under this prefill

- API request structure

      [
        {
          "preFill": [
            [
              { "questionId": "AgentIDQuestionID", "input": "Agent01" },
              { "questionId": "CustomerNameQuestionID", "input": "John Doe" },
              { "questionId": "CustomerMobileQuestionID", "input": "888888" },
              { "questionId": "CustomerEmailQuestionID", "input": "1@1.com" },
              { "questionId": "CustomerIDQuestionID", "input": "CUST01" },
              { "questionId": "AgentEmailQuestionID", "input": "a01@a.com" }
            ],
            [
              { "questionId": "AgentIDQuestionID", "input": "Agent02" },
              { "questionId": "CustomerNameQuestionID", "input": "John" },
              { "questionId": "CustomerMobileQuestionID", "input": "999999" },
              { "questionId": "CustomerEmailQuestionID", "input": "2@2.com" },
              { "questionId": "CustomerIDQuestionID", "input": "CUST02" },
              { "questionId": "AgentEmailQuestionID", "input": "a02@a.com" }
            ]
          ],
          "dispatchID": "dp123"
        }
      ]

- Multi-language support

  - The language parameter can be passed as one of the Experience Management prefills in the Batch Request API. This prefill overrides the default language settings, and Content templates are chosen based on it. The question tag to prefill is “cc_language”, and the answer to prefill must be an ISO 639-1 code.

- API Response:

      {
        "batchId": "Id to track back the upload, every token will have this field to track",
        "statusByDispatch": [
          { "dispatchId": "12ac23", "message": "", "dispatchStatus": 202 },
          { "dispatchId": "12sadfd", "message": "", "dispatchStatus": 206 }
        ]
      }

  - batchId is generated per API request for both success and failure scenarios, except for account-level exceptions or errors (authorization denied, payload too large, etc.)

  - statusByDispatch is an array that holds the status per dispatchId

  - dispatchId in the response is the same as the one passed in the request, so each dispatchId can be tracked

  - The message field carries information in the case of a partially accepted or failed batch

  - dispatchStatus is 202 for a completely accepted batch, 206 for a partially accepted batch, and 400 for a completely failed dispatch batch
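As a rough illustration of the request structure above, the following Python sketch assembles a payload from per-dispatch records. The helper name `build_dispatch_request` and its input shape are hypothetical; the limits of 18000 records and 15 Dispatch IDs are the values quoted in the Error Scenarios section and may be configured differently in a given deployment.

```python
import json

# Limits quoted in the Error Scenarios section; treat them as assumptions,
# since the module describes these thresholds as configurable.
MAX_RECORDS = 18000
MAX_DISPATCH_IDS = 15


def build_dispatch_request(records_by_dispatch):
    """Build the request body from {dispatchID: [ {questionId: value}, ... ]}."""
    if len(records_by_dispatch) > MAX_DISPATCH_IDS:
        raise ValueError("too many Dispatch IDs for one request")
    total = sum(len(records) for records in records_by_dispatch.values())
    if total > MAX_RECORDS:
        raise ValueError("too many records for one request")

    payload = []
    for dispatch_id, records in records_by_dispatch.items():
        # One inner list of {questionId, input} pairs per record.
        pre_fill = [
            [{"questionId": qid, "input": value} for qid, value in record.items()]
            for record in records
        ]
        payload.append({"preFill": pre_fill, "dispatchID": dispatch_id})
    return payload


payload = build_dispatch_request(
    {"dp123": [{"CustomerEmailQuestionID": "1@1.com", "AgentIDQuestionID": "Agent01"}]}
)
body = json.dumps(payload)  # string to POST to Base URL + /api/dispatchRequest
```

Checking the limits on the client side only avoids a round trip; the API still enforces its own configured thresholds.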

4.1.3 Error Scenarios

- For a successful API call, HTTP response code 207 Multi-Status is returned along with “batchId” and the statuses per dispatch. A dispatchID whose records are completely accepted has dispatchStatus “202”

- For a partially accepted batch, HTTP response code 207 Multi-Status is returned, and each dispatchID whose records are partially accepted has dispatchStatus “206”

- For any error in the API call, HTTP response code 400 or 500 is returned: 400 for a bad request and 500 for an internal server error. The “message” field contains the details of the error encountered

- If the API receives more than 18000 records in one API call, or the call contains more than 15 Dispatch IDs, it returns 413 Payload Too Large

- All unauthorized requests are returned with a 401 Unauthorized response code
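A caller could interpret these status codes along the following lines. This is a minimal Python sketch, assuming the response body has already been parsed from JSON; the function name `summarize_response` and the label strings are hypothetical, while the status codes and field names are those listed above.

```python
# Per-dispatch statuses carried inside a 207 Multi-Status response.
DISPATCH_LABELS = {
    202: "accepted",
    206: "partially accepted",
    400: "rejected",
}


def summarize_response(http_status, body):
    """Map each dispatchId in a 207 response to a readable label.

    Raises RuntimeError for request-level failures (400, 401, 413, 500).
    """
    if http_status != 207:
        raise RuntimeError("request-level failure: HTTP %d" % http_status)
    return {
        item["dispatchId"]: DISPATCH_LABELS.get(item["dispatchStatus"], "unknown")
        for item in body["statusByDispatch"]
    }


sample_body = {
    "batchId": "example-batch",  # placeholder value
    "statusByDispatch": [
        {"dispatchId": "12ac23", "message": "", "dispatchStatus": 202},
        {"dispatchId": "12sadfd", "message": "", "dispatchStatus": 206},
    ],
}
summary = summarize_response(207, sample_body)
```

For dispatches that are partially accepted or rejected, the “message” field of the corresponding entry is the place to look for details.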

4.2 PII (Personally Identifiable Information) Details

Most businesses collecting feedback from their customers care about the sensitivity of any PII that is collected and stored along with the customer’s feedback. They also have concerns around the handling of PII in the context of running a compliant business.

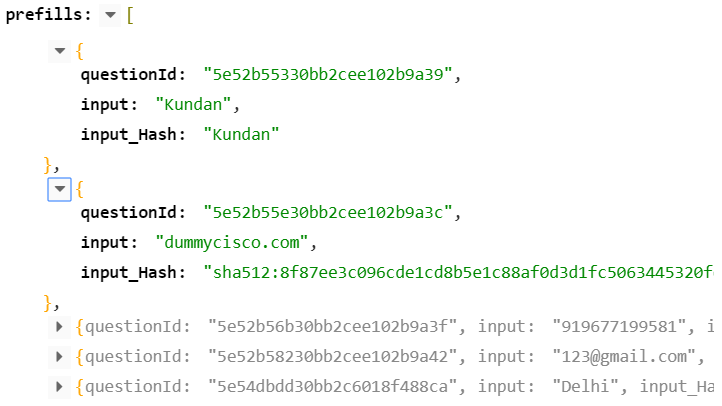

In such cases, the ability to restrict PII storage, or to store it in ways that do not expose it to certain users such as vendors, partners, and external support teams, is useful. The Dispatch API provides the flexibility to hash PII records at source and pass only the hashed information to Experience Management for survey token creation. Experience Management is designed to run with zero PII and anonymous data sets.

While processing a request, the Dispatch API identifies the records that are marked as PII and chosen to be hashed in the Experience Management portal, and creates a hashed equivalent of each. The responses to prefill questions that are tagged as PII, with the PII handling type set to Hashing in Experience Management, need to be hashed.

The options to mark a prefill as PII and to choose the hashing algorithm reside in the Experience Management portal. The hashing algorithms currently supported are listed below:

| Algorithm | Hash Length (bits) | Hexadecimal String Length | Final Output (always in lower case) |
|---|---|---|---|
| sha256 | 256 | 64 | sha256:{output in hashed} |
| sha384 | 384 | 96 | sha384:{output in hashed} |
| sha512 | 512 | 128 | sha512:{output in hashed} |

In the rare scenario where the Dispatch API cannot find the required hashing algorithm using Experience Management APIs and the prefills are tagged to be hashed, the default hashing algorithm, sha512, is used. The flow of PII for a token journey is as follows:

- Dispatch API identifies prefills which are PII and are required to be hashed.

- A DB record is created with a mapping of the original value to the hashed value

- The Dispatch API requests survey token creation through the Experience Management bulk API, using the hashed values of the prefills (those marked as PII and tagged with the “hash” PIIType)

- Once the token is created, Experience Management processes the records as per the configured business policy and enqueues them to the configured queue.

- The Dispatcher module dequeues the records, performs validations, retrieves the original values of the hashed records with a database lookup, and dispatches the invitations to end customers using the configured SMS/Email vendors.
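The hashing convention described in this section can be sketched in Python as follows. The lowercase conversion before hashing, the supported algorithms, the `algorithm:digest` output format, and the sha512 default come from the text above; the UTF-8 encoding and the function name `hash_pii` are assumptions, and falling back on an unrecognized algorithm name is only a stand-in for the fallback the text describes.

```python
import hashlib

SUPPORTED_ALGORITHMS = ("sha256", "sha384", "sha512")


def hash_pii(value, algorithm="sha512"):
    """Return '<algorithm>:<lowercase hex digest>' of the lowercased value."""
    if algorithm not in SUPPORTED_ALGORITHMS:
        algorithm = "sha512"  # default when no supported algorithm is found
    # UTF-8 is an assumption; the document does not state the encoding.
    data = value.lower().encode("utf-8")
    digest = hashlib.new(algorithm, data).hexdigest()  # hexdigest is lowercase
    return algorithm + ":" + digest


hashed_upper = hash_pii("John.Doe@Example.com", "sha256")
hashed_lower = hash_pii("john.doe@example.com", "sha256")
```

Because values are lowercased first, `hashed_upper` and `hashed_lower` are identical, which is what lets the Dispatcher look up the original value from the hash regardless of the casing used by the source system.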

4.3 Caching Mechanism

Dispatch API caches certain details from Experience Management for a certain time to keep the processing faster and avoid the load on Experience Management APIs. It uses MemoryCache internally which caches the information with assigned expiry in seconds. The following information is cached in the MemoryCache within Dispatch API.

- Authentication Token - Cached for 15 mins once authenticated. Any Dispatch request that arrives with the same Authentication token while it is in MemoryCache is not validated again

- List of dispatches - Cached for an hour. All subsequent requests containing the same dispatchID reuse the cached response until it expires. Once expired, a fresh response is retrieved using the Experience Management API and cached again for an hour

- Settings, Delivery Policy, Active Questions, Questionnaire Responses - Cached for an hour, with the same refresh behavior as the list of dispatches above.

- Account Configuration - Cached for an hour. Details are retrieved using a DB call.

Because of this caching, the information used by the Dispatch API is not real-time and can lag by up to 1 hour. Changes made in the Experience Management portal are not reflected in the Dispatch API until the old cached response has expired and the details have been fetched again over the API. Likewise, changes made in the ACM front-end take up to 1 hour to be reflected in the Dispatch API. For example, if a new Dispatch ID is created in Experience Management and configured in the ACM front-end with vendor details, a request containing this Dispatch ID is accepted by the Dispatch API only once the cache is refreshed.
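The expiry behavior described here can be illustrated with a small time-to-live cache. This Python sketch is only an analogue of the MemoryCache used by the Dispatch API, not its implementation: the class name `TtlCache` and the injectable clock are assumptions made so that the expiry can be demonstrated without waiting.

```python
import time


class TtlCache:
    """Tiny cache whose entries expire a fixed number of seconds after being set."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._items = {}

    def set(self, key, value, ttl_seconds):
        self._items[key] = (value, self._clock() + ttl_seconds)

    def get(self, key):
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._items[key]  # expired: the caller must fetch again
            return None
        return value


# A fake clock stands in for real time so the one-hour expiry is visible.
now = [0.0]
cache = TtlCache(clock=lambda: now[0])
cache.set("dispatches", ["dp123"], ttl_seconds=3600)
fresh = cache.get("dispatches")  # within the hour: served from the cache
now[0] = 3601.0
stale = cache.get("dispatches")  # after the hour: treated as missing
```

A `None` result corresponds to the point at which the Dispatch API would call Experience Management again and cache the new response, which is why portal changes can take up to an hour to appear.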

4.4 Extensibility options for In-memory queue

Dispatch Request makes use of an in-memory queue to batch the bulk API payloads. An in-memory queue is considered adequate for the CEM use case: in the worst case, if this component restarts, the loss is 1 min (or the configured interval) worth of invites, which is negligible. Unlike financial transactions, the loss of 1 min worth of survey data doesn’t significantly impact the overall experience metric that an organization is tracking or the overall outcome.

However, it can be extended to use persistent queues such as Azure Storage queues or AWS SQS. The advantage of a persistent queue is that no records are lost. The steps to achieve this are as follows.

- To extend the queue from in-memory to a persistent queue, partners can implement the “IBatchingQueue” interface for the “Insert” function. The next step is to make the new object available at run time to the Dispatch API controller. To achieve this, add the following key-value pair to the “AvailableQueues” dictionary in Startup.cs -> SetConfiguration(). A sample is provided below.

SharedSettings.AvailableQueues.Add("exampleQueue", new ExampleQueueImplementation());

- Validation is also done against the AccountConfiguration collection to make sure the right queue is configured and chosen at run-time. To insert the new queue name in the AccountConfiguration collection, use the following API.

GET {BaseURL}/api/config/extendedproperties -> Gives the latest configuration available

POST {BaseURL}/api/config/extendedproperties

Request Body:

    {
      "BatchingQueue": "exampleQueue", // Pass same key added in “AvailableQueues” dictionary
      "Sampler": "wxm",
      "Unsubscriber": "wxm"
    }

Response: 200 HTTP response code with string same as request body

4.5 Sampling

Default sampling is not included in the current release. We will look to add this in the future.

4.5.1 Extensibility options in Sampling

- To extend the sampling logic to support any custom rules, partners can implement the **“ISampler” interface for the “IsSampledAsync”** function.

- The next step is to make the new object available at run time to the Dispatch API controller. To achieve this, add the following key-value pair to the “AvailableSamplers” dictionary in Startup.cs -> SetConfiguration(). A sample is provided below.

SharedSettings.AvailableSamplers.Add("exampleSmample", new ExampleSamplingLogic());

- Validation is also done against the AccountConfiguration collection to make sure the right sampling logic is configured and chosen at run-time. To insert the new sampling implementation name in the AccountConfiguration collection, use the following API.

GET {BaseURL}/api/config/extendedproperties -> Gives the latest configuration available

POST {BaseURL}/api/config/extendedproperties

Request Body:

    {
      "BatchingQueue": "exampleQueue",
      "Sampler": "wxm", // Pass same key added in “AvailableSamplers” dictionary
      "Unsubscriber": "wxm"
    }

Response: 200 HTTP response code with string same as request body

4.6 Cross Channel Token Creation

The Single instance Invitations module (Dispatch request) batches the requests for token creation and runs a background process every 1 min (configurable) to request token creation using the Experience Management bulk token API. Once the survey tokens are successfully created, the Dispatch request API component updates the token details in the EventLog collection. The Dispatcher module later refers to this information while dispatching the survey invites.

At the Experience Management end, once the survey tokens are created, they are attached to a DP (delivery policy). The DP runs every 5 mins and checks for tokens to be processed within the configured business hours. The DP also checks for unsubscription or throttling (survey fatigue rules) and eliminates the tokens that fall into these categories. It also attaches the Email/SMS content template configured for the Dispatch for which the token was created. In the case of multi-language, the language preference is attached to the token as one of the prefills, based on which the email/SMS content template is processed in the respective language.

Once all these criteria are successfully met, DP will create a survey record object and push to Azure or AWS queue. From here, the Dispatcher module will be triggered to further process and dispatch the records to end customers using the Email/SMS vendors.

4.7 Unsubscribe

The unsubscribe component resides within the Experience Management product. Once the Cloud hosted components interface with the Experience Management delivery policy module to create survey tokens, the delivery policy module refers to the “unsubscribe list” it maintains and discards any created token that has a corresponding unsubscribe entry. The discarded token thus never reaches the dispatch queue, and no mail is delivered to the unsubscribed customer.

Though the core unsubscribe list is maintained within the Experience Management product, we offer an extensibility option within the partner hosted module. This extensibility hook sits before the cross-channel token creation step. It gives partners the flexibility to refer to an API, a 3rd party database maintained by the client, or any other global database, and to identify records in the dispatch request that should be removed from the request. Records identified in this way are not even sent for cross-channel token creation, thereby suppressing the email/SMS for that customer.
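Conceptually, the hook acts as a filter that runs before token creation. The Python sketch below illustrates that idea only; the function names, the record shape, and the in-memory blocked list are hypothetical stand-ins for a partner's own API or database lookup, and the module's actual hook is the C# interface described in the next section.

```python
def filter_unsubscribed(records, is_unsubscribed):
    """Keep only the records the custom checker does not flag as unsubscribed."""
    return [record for record in records if not is_unsubscribed(record)]


def example_checker(record):
    # Hypothetical client-maintained list; a real checker might call an
    # external API or query a 3rd party database instead.
    blocked_emails = {"2@2.com"}
    return record["email"] in blocked_emails


records = [{"email": "1@1.com"}, {"email": "2@2.com"}]
kept = filter_unsubscribed(records, example_checker)
```

Only the records in `kept` would go on to cross-channel token creation, so no survey link is ever generated for the suppressed customer.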

4.7.1 Extensibility options in Unsubscribe

- To extend the unsubscribe custom check, partners can implement the “IUnsubscribeChecker” interface for the “IsUnsubscribedAsync” function.

- The next step is to make the new object available at run time to the Dispatch API controller. To achieve this, add the following key-value pair to the “AvailableUnsubscribeCheckers” dictionary in Startup.cs -> SetConfiguration(). A sample is provided below.

SharedSettings.AvailableUnsubscribeCheckers.Add("exampleUnsubscribe", new ExampleUnSubscribeCheck());

- Validation is also done against the AccountConfiguration collection to make sure the right unsubscribe logic is configured and chosen at run-time. To insert the new unsubscribe implementation name in the AccountConfiguration collection, use the following API.

GET {BaseURL}/api/config/extendedproperties -> Gives the latest configuration available

POST {BaseURL}/api/config/extendedproperties

Request Body:

    {
      "BatchingQueue": "exampleQueue",
      "Sampler": "wxm",
      "Unsubscriber": "wxm" // Pass same key added in “AvailableUnsubscribeCheckers” dictionary
    }

Response: 200 HTTP response code with string same as request body

4.8 Mongo DB

For the end-to-end functioning of the Invitations Delivery Module, a variety of data is stored in various MongoDB collections, which are inserted into, read, and updated by the different components of the Invitations Feature Module. The following section details each collection and its usage:

- EventLog

  - Stores the data with different Event Actions, that is, the trail of actions that have happened on a record. The enum for all Event Actions is given below.

  - Requested = 0, Rejected = 1, TokenCreated, Sent, Error, Suppressed, DispatchSuccessful, DispatchUnsuccessful, Throttled = 8

  - Stores all DIWEF logs generated through the life of Invitations delivery

  - Accessed by the Web API module (Dispatch and EventLog API + Background scheduler) and the Dispatcher module

- AccountConfiguration

  - Stores the vendor credentials and channel details per dispatch.

  - Should be configured using the ACM (Account Configuration Management) front end

  - Data is inserted by the Account Config Web API and accessed by the Dispatch API, the EventLog API, and the Dispatcher module

- BulkMessage

  - Stores invitations whose vendor is of type Bulk-Send, so that they can be batched (explained in the next section)

  - Inserted, read and updated by the Dispatcher component

4.9 Dispatcher

This component is responsible for the last-mile dispatch of the survey invitation, through 3rd party email/SMS systems, to the intended recipient. The Dispatcher supports both Single-Send and Bulk-Send vendors. A Single-Send vendor accepts only 1 invitation per service request, while a Bulk-Send vendor accepts more than 1 invitation (usually upwards of 1K invitations) per service request. The following is the list of vendors whose reference implementations are provided in the source code:

- CustomSMTP: A Single-Send Email Vendor

- MessageBird: A Single-Send SMS Vendor

- SparkPost: A Bulk-Send Email Vendor

Additionally, through the Dispatcher’s extensibility options (covered later in this section), there is a flexibility to tie up with other vendors of choice.

To start using the CustomSMTP/MessageBird/SparkPost option(s) directly, simply provide your SMTP-Server/MessageBird-Account/SparkPost-Account details in the Account Configuration Management.

4.9.1 Functioning

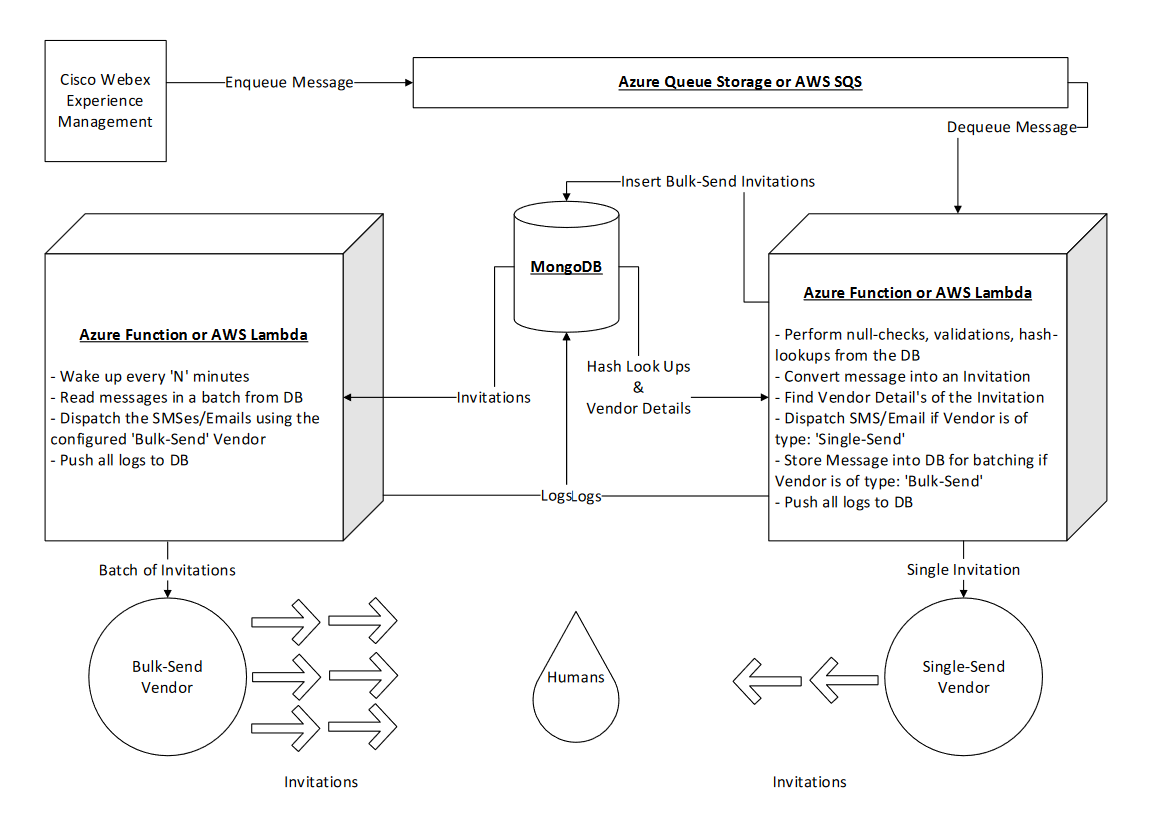

The starting point for this component is the Azure Queue Storage or the AWS SQS into which the Experience Management enqueues all the messages. The following is the lifecycle of these messages:

- Every message is dequeued one-by-one from the queue using an Azure Function/AWS Lambda (a serverless compute, referred to as Queue-Trigger from here on) that internally uses a queue-message based event-trigger to achieve this functionality.

- These event-triggers wake up the Queue-Trigger, which then performs the required null-checks, validations, and hash lookups on the dequeued message before ultimately converting it into an invitation. An invitation is a personalized email or SMS that contains a unique survey link, which solicits user feedback.

- Each invitation created has a vendor tied to it, whose details are present in the AccountConfiguration-Collection. The Queue-Trigger therefore reads the respective vendor’s details from the AccountConfiguration-Collection.

- Based on the type of vendor fetched for the given invitation, one of the following occurs:

  - Single-Send Vendor: If the vendor is of this type, the invitation is immediately dispatched to the vendor for end-delivery to the intended recipient. Once the vendor accepts the invitation, all logs are pushed to the EventLog-Collection, after which the Queue-Trigger goes back to sleep.

  - Bulk-Send Vendor: If the vendor is of this type, the invitation is stored in the BulkMessage-Collection for the sole purpose of batching, which ultimately enables a one-shot bulk dispatch of all the batched invitations to the desired Bulk-Send Vendor. Once the database accepts the invitation, all logs are pushed to the EventLog-Collection, after which the Queue-Trigger goes back to sleep.

- The second serverless compute (referred to as Time-Trigger from here on) wakes up every nth minute via a time based event-trigger to fetch a batch of invitations from the BulkMessage-Collection. This compute then dispatches the retrieved batch of invitations to the Bulk-Send Vendor for their respective individual deliveries to all the intended recipients. On acceptance of these invitations, all logs are pushed to the EventLog-Collection, after which the Time-Trigger goes back to sleep.

Therefore, the Dispatcher supports the following two kinds of Dispatch-Mechanisms:

- Single Dispatch-Mechanism (via Single-Send vendors): For handling a low volume of invitation deliveries

- Bulk Dispatch-Mechanism (via Bulk-Send vendors): For handling a high volume of invitation deliveries
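The two mechanisms can be sketched together in Python as a rough illustration of the routing described above. Everything named here is hypothetical: the `Dispatcher` class, its method names, the vendor dictionary shape, and the in-memory list standing in for the BulkMessage collection. The real Queue-Trigger and Time-Trigger are separate serverless functions and also write to the EventLog collection, which this sketch omits.

```python
class Dispatcher:
    """Routes invitations to Single-Send vendors immediately and batches the rest."""

    def __init__(self, vendors, bulk_read_size=10000):
        # vendors: name -> {"type": "single" | "bulk", "send": callable(list)}
        self.vendors = vendors
        self.bulk_read_size = bulk_read_size
        self.bulk_store = []  # stands in for the BulkMessage collection

    def on_queue_message(self, invitation):
        """Queue-Trigger role: handle one dequeued invitation."""
        vendor = self.vendors[invitation["vendor"]]
        if vendor["type"] == "single":
            vendor["send"]([invitation])  # dispatched right away
        else:
            self.bulk_store.append(invitation)  # held for the next timer run

    def on_timer(self):
        """Time-Trigger role: send one batch of stored invitations per vendor."""
        batch = self.bulk_store[: self.bulk_read_size]
        self.bulk_store = self.bulk_store[self.bulk_read_size :]
        by_vendor = {}
        for invitation in batch:
            by_vendor.setdefault(invitation["vendor"], []).append(invitation)
        for name, invitations in by_vendor.items():
            self.vendors[name]["send"](invitations)


sent = []  # records (vendor name, number of invitations) per vendor call
vendors = {
    "smtp": {"type": "single", "send": lambda invs: sent.append(("smtp", len(invs)))},
    "sparkpost": {"type": "bulk", "send": lambda invs: sent.append(("sparkpost", len(invs)))},
}
dispatcher = Dispatcher(vendors)
dispatcher.on_queue_message({"id": 1, "vendor": "smtp"})
dispatcher.on_queue_message({"id": 2, "vendor": "sparkpost"})
dispatcher.on_queue_message({"id": 3, "vendor": "sparkpost"})
dispatcher.on_timer()
```

In this run the Single-Send vendor receives one call per invitation, while the two Bulk-Send invitations reach their vendor in a single call made by the timer.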

4.9.2 Source Code

Links to the source code of the two serverless computes are presented below:

Azure:

- Queue-Trigger - an Azure Function that uses Azure Queue Storage Triggers as its event-trigger

- Time-Trigger - an Azure Function that uses Azure Time Triggers as its event-trigger

AWS:

- Queue-Trigger - an AWS Lambda that uses AWS SQS as an Event Source as its event-trigger

- Time-Trigger - an AWS Lambda that uses AWS CloudWatch-Events as an Event Source as its event-trigger

4.9.3 Scalability and Extensibility

The Dispatcher has been made highly customizable, where you can choose to horizontally and/or vertically scale the serverless computes to handle a variety of dispatch loads. In addition to this, you can choose to integrate with your choice of Single-Send/Bulk-Send Vendor(s) as well.

- Scalability of the Queue-Trigger Serverless Compute:

  - Vertical Scaling:

    - Azure: A single Queue-Trigger Azure Function can vertically scale to process up to 48 messages concurrently (the batch-size of 32 plus a new-batch threshold of half that value, which is the default). The value of the batch-size, which decides the maximum concurrency limit, can be changed in the host.json file of the provided Azure Queue-Trigger source code. Official Reference.

      Source Code's Batch-Size = 32

    - AWS: A single Queue-Trigger AWS Lambda can vertically scale to process up to 10 messages concurrently. This Batch-Size value is set in the 10th step of your AWS Lambda deployment. Official Reference.

      Source Code's Batch-Size = 10

  - Horizontal Scaling: As we utilize a serverless architecture, the Queue-Trigger Azure Function/AWS Lambda can be naturally horizontally scaled by deploying more than one instance of it.

- Scalability of the Time-Trigger Serverless Compute:

  - Vertical Scaling: A single Time-Trigger Azure Function/AWS Lambda can be vertically scaled in two ways. Firstly, one can modify the number of Bulk-Send invitations that the Time-Trigger processes at once by changing its Bulk-Read-Size property. Secondly, one can modify the wake-up frequency of the Time-Trigger by changing the CRON expression of the time-based event-trigger.

    - Azure: Both the Bulk-Read-Size property and the CRON expression can be changed in the trigger.cs file of the provided Azure Time-Trigger source code. To change the Bulk-Read-Size property, alter the respective DispatchHandler's constructor argument from 10000 to a value of your choice. To change the CRON expression, modify the RunAsync function’s TimeTrigger parameter from 0 */5 * * * * to a value of your choice.

    - AWS: The Bulk-Read-Size property can be changed in the trigger.cs file of the provided AWS Time-Trigger source code, whereas the CRON expression is set in the 12th step of your AWS Lambda deployment. To change the Bulk-Read-Size property, alter the respective DispatchHandler's constructor argument from 10000 to a value of your choice.

    Source code's Bulk-Read-Size = 10000, CRON expression = every 5 minutes

  - Horizontal Scaling: As we utilize a serverless architecture, the Time-Trigger Azure Function/AWS Lambda can be naturally horizontally scaled by deploying more than one instance of it, where each compute wakes up at a different instant to read from the BulkMessage-Collection. Every time it wakes up, a Time-Trigger reads and updates the status of a batch of Bulk-Send invitations, which allows other Time-Trigger instances to pick up another batch from the same collection while the already running Time-Triggers are still processing theirs. After a bulk dispatch is made, the Time-Trigger deletes its batch of Bulk-Send invitations from the BulkMessage-Collection.

    However, the [Bulk-Find-and-Update] operation of a Time-Trigger, which is responsible for reading and updating the status of a batch of invitations, isn't atomic, so no two [Bulk-Find-and-Update] operations should overlap. Therefore, a new Time-Trigger should only wake up once the [Bulk-Find-and-Update] operations of all the currently running Time-Triggers have completed.

- Vendor Extensibility: One can integrate with their choice of vendor by implementing either the ISingleDispatchVendor or the IBulkDispatchVendor interface provided in the source code. To integrate with a new Single-Send Vendor (reference implementations: CustomSMTP and MessageBird), an object of type ISingleDispatchVendor must be added to your Queue-Trigger Serverless Compute’s runtime. This is done via the Trigger.cs file in the provided Azure/AWS Queue-Trigger source code, using the DispatchHandler's Additional-Dispatch-Creator-Strategies constructor argument. To integrate with a new Bulk-Send Vendor (reference implementation: SparkPost), an object of type IBulkDispatchVendor must be added to your Time-Trigger Serverless Compute’s runtime. This is also done via the Trigger.cs file in the provided Azure/AWS Time-Trigger source code, again using the DispatchHandler's Additional-Dispatch-Creator-Strategies constructor argument.

- Logging Extensibility: One can set the desired level of logging (1=Failure; 5=Debug) directly in the Trigger.cs file of the provided Azure/AWS Queue-Trigger source code and Azure/AWS Time-Trigger source code. To change the Log-Level property, alter the respective DispatchHandler's constructor argument from 5 to a value of your choice.
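The horizontal-scaling constraint on the Time-Trigger (no two [Bulk-Find-and-Update] operations may overlap) can be sketched in Python as follows. This is an illustration under stated assumptions, not the provided implementation: `BulkMessageStore`, `claim_batch`, and `run_time_trigger` are hypothetical names, the collection is an in-memory dictionary, and an in-process lock stands in for the staggered wake-up schedule that keeps the two-step operation from overlapping across real instances.

```python
import threading
from typing import Dict, List


class BulkMessageStore:
    """In-memory stand-in for the BulkMessage-Collection (hypothetical)."""

    def __init__(self, invitation_ids: List[str]) -> None:
        self._status: Dict[str, str] = {i: "pending" for i in invitation_ids}
        # Serializes access so that two find-and-update runs never overlap.
        self._lock = threading.Lock()

    def claim_batch(self, worker: str, bulk_read_size: int) -> List[str]:
        with self._lock:
            # Step 1 (find): read up to bulk_read_size pending invitations.
            pending = [i for i, s in self._status.items() if s == "pending"]
            batch = pending[:bulk_read_size]
            # Step 2 (update): mark them so that other workers skip them.
            for i in batch:
                self._status[i] = f"claimed:{worker}"
        return batch

    def delete_batch(self, batch: List[str]) -> None:
        with self._lock:
            for i in batch:
                self._status.pop(i, None)

    def remaining(self) -> int:
        with self._lock:
            return len(self._status)


def run_time_trigger(store: BulkMessageStore, worker: str,
                     bulk_read_size: int, dispatched: List[str]) -> int:
    """One wake-up: claim a batch, dispatch it, then delete it."""
    batch = store.claim_batch(worker, bulk_read_size)
    if batch:
        dispatched.extend(batch)  # stands in for the bulk dispatch to the vendor
        store.delete_batch(batch)
    return len(batch)
```

Without the serialization in `claim_batch`, a second worker could run its find step between the first worker's find and update steps, and both would dispatch the same invitations.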

4.9.4 Caching

To improve the performance of the Dispatcher component, the Account-Configuration is cached when the first message trigger is fired. These details are then shared amongst all subsequent message trigger invocations through a singleton that persists in the serverless compute’s memory for its entire lifetime. Therefore, any change made to the Account-Configuration via the Account Configuration Management component warrants a restart of all the existing Queue-Trigger(s). As the Time-Trigger(s) don’t depend on the Account-Configuration, they don’t need to be restarted.
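The caching behaviour described above can be sketched in Python as a process-wide singleton. The class name `AccountConfigCache`, the `loader` callback, and the `reset` method are hypothetical and only illustrate the idea; `reset` plays the role of restarting the compute.

```python
from typing import Callable, Optional


class AccountConfigCache:
    """Loads the Account-Configuration once per process and then reuses it."""

    _instance: Optional["AccountConfigCache"] = None

    def __init__(self, config: dict) -> None:
        self.config = config

    @classmethod
    def get(cls, loader: Callable[[], dict]) -> "AccountConfigCache":
        # The loader runs only on the first invocation in this process.
        if cls._instance is None:
            cls._instance = cls(loader())
        return cls._instance

    @classmethod
    def reset(cls) -> None:
        """Drop the cached copy, as a restart of the compute would."""
        cls._instance = None
```

Every call to `get` after the first returns the same object, so a configuration change made in the meantime is not visible until the cache is dropped.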

4.9.5 Dispatch Failure

In the highly unlikely scenario of a dispatch failure, which could occur due to misconfigurations, third-party vendor issues, or an internal exception, the invitation(s) will be lost. This is due to the lightweight nature of this component. However, the Notifications component will make one aware of the failure in real-time/EOD, and the extensive logging helps one to debug the root cause and stop it from recurring.

4.9.6 Dead Letter Queues and Messages

In Azure, if there is an internal exception while dequeuing a message from the Queue Storage, the message results in an error, but its dequeue count still increases by one. Such errors are very rare and might occur due to an exception in Azure's PaaS management of the Queue/Azure Function. Nonetheless, because of the MaxDequeueCount set in host.json, the message will be dequeued 4 more times before it moves to a dead letter queue. Azure creates this dead letter queue automatically. For example, if your queue name is “invitations”, these errored messages end up in a queue called “invitations-poison” after dequeuing has been attempted the set number of times, which is the MaxDequeueCount property in the host.json file. This queue is not provisioned manually; it is created automatically in the same storage account on the first occurrence of a dead letter message.

In the case of AWS, we need to create a dead letter queue ourselves. Here too, messages will throw an error if there is an exception in AWS's PaaS management of the SQS/Lambda. Additionally, if the Lambda itself gets throttled, any messages that were not accepted by the Lambda instances on dequeuing will also result in an error. You can refer to this official documentation which captures this issue. However, as with Azure, dequeuing of these messages is attempted a set number of times, which is the Maximum Receives property of the SQS that is created as the Lambda’s event source. You set your own choice of value at this deployment step.

Dead letter messages should be a very rare occurrence, even in the case of the AWS Lambda getting throttled. We have set a high visibility timeout for SQS messages to avoid this. Nonetheless, it is advisable to check monthly whether any dead letter messages have appeared in the Azure Poison Queue or the AWS Dead Letter Queue.
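The retry-then-dead-letter behaviour described above can be modelled with a short Python sketch. It is a simplified, in-memory model and not how Azure Queue Storage or SQS is implemented: the function names are hypothetical, visibility timeouts are ignored, and the default of 5 attempts mirrors the "first attempt plus 4 more" description above.

```python
from collections import deque
from typing import Callable, List, Tuple


def poison_queue_name(queue_name: str) -> str:
    """Name of the automatically created Azure dead letter queue."""
    return f"{queue_name}-poison"


def drain(messages: List[str], handler: Callable[[str], None],
          max_dequeue_count: int = 5) -> Tuple[List[str], List[str]]:
    """Process messages, retrying failures until the dequeue limit is hit.

    Returns (processed, dead_lettered).
    """
    pending = deque((m, 0) for m in messages)
    processed: List[str] = []
    dead_lettered: List[str] = []
    while pending:
        message, dequeue_count = pending.popleft()
        dequeue_count += 1  # every attempt counts, including failing ones
        try:
            handler(message)
            processed.append(message)
        except Exception:
            if dequeue_count >= max_dequeue_count:
                dead_lettered.append(message)  # give up: move to dead letter queue
            else:
                pending.append((message, dequeue_count))  # becomes visible again
    return processed, dead_lettered
```

A message that keeps failing is attempted `max_dequeue_count` times in total and then set aside, which is why the dead letter queue is the place to look during the monthly check.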

4.10 Initiator

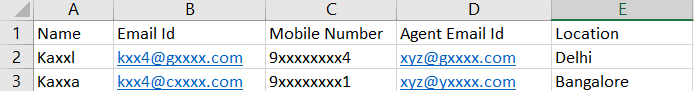

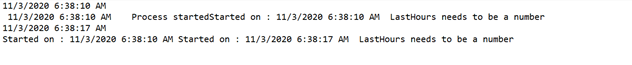

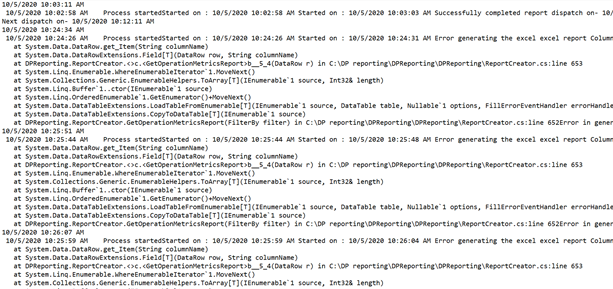

This component is responsible for converting a meaningful .csv or .xlsx file, which is provided by the client, into a valid Dispatch Request API call that initiates the entire Invitation-Delivery workflow, beginning at the Dispatch Request Component. These meaningful .csv or .xlsx files, referred to as target files from here on, contain the information that allows the component to form the required HTTP Request Body of the Dispatch Request API. This is done according to the API’s requirements that have been detailed out here.

The structure of the target files is as follows:

- Sample .csv Target File:

Name, Email Id, Mobile Number, Agent Email Id, Location

Kaxxl, kxx4@gxxxx.com, 9xxxxxxxx4, xyz@gxxxx.com, Delhi

Kaxxa, kxx4@cxxxx.com, 9xxxxxxxx1, asd@yxxxx.com, Bangalore

- Sample .xlsx Target File:

Essentially, each row (apart from the first row) in the target file contains the answer part of the pre-filled data that is sent to the Dispatch Request Component, and therefore each row translates into a unique invitation. The first row of the target file, also known as the header row, contains column names that each represent the question part of the pre-filled data. The mapping of these column names to valid XM Question Ids is configured and stored in XM.

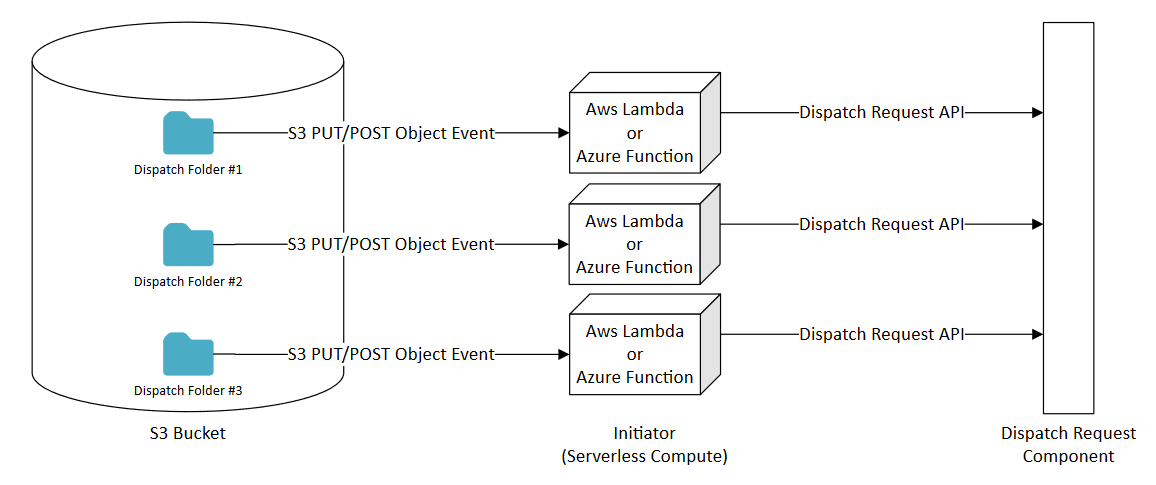

4.10.1 Functioning

The starting point for this component is always an AWS S3 Bucket to which the clients upload their target files. Once a target file is added to the S3 Bucket, S3 Events trigger a serverless compute (an AWS Lambda or an Azure Function), which then consumes the newly uploaded target file.

For this component to work, each Dispatch created by the client in XM has to have its own unique folder/directory in the S3 Bucket into which clients will upload the target file along with a mandatory config.json file. The config.json file is uploaded only once and is reused across all the subsequent target file uploads. The config.json is required to have the following structure:

{

"DispatchId":"5xxxxxxxxxxxxxxxxxxa3624",

"DispatchReqApi":"https://xxxxxx.xxxxxxx.xxx:8xxx"

}

Here the DispatchId represents the unique identifier of the Dispatch that was created in XM, and the DispatchReqApi represents the URL where the Dispatch Request Component has been deployed.
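As an illustration, a config.json with this structure could be checked before use with a few lines of Python. This is a sketch only: the function name `load_dispatch_config` and the specific checks are assumptions and are not taken from the provided source code.

```python
import json
from urllib.parse import urlparse

REQUIRED_KEYS = ("DispatchId", "DispatchReqApi")


def load_dispatch_config(raw: str) -> dict:
    """Parse config.json text and verify that both required keys are usable."""
    config = json.loads(raw)
    if not isinstance(config, dict):
        raise ValueError("config.json must contain a JSON object")
    missing = [key for key in REQUIRED_KEYS if not config.get(key)]
    if missing:
        raise ValueError(f"config.json is missing: {', '.join(missing)}")
    url = urlparse(config["DispatchReqApi"])
    if url.scheme not in ("http", "https") or not url.netloc:
        raise ValueError("DispatchReqApi must be an absolute http(s) URL")
    return config
```

Failing early on a malformed config.json keeps a bad upload from producing a request to the wrong endpoint.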

Once the trigger is invoked, the serverless compute downloads both the target file and its corresponding config file from the same folder of the configured S3 Bucket. Immediately after the target file has been downloaded, a request is made to S3 to archive only the target file. As a result of this operation, the target file ends up in a sub-folder called Archive inside the Dispatch Folder. This is the same Dispatch Folder into which the target file was initially uploaded.

After null checks and important validations are performed on the corresponding Dispatch, the target file, which has been downloaded into the serverless compute’s memory, is converted into its equivalent Dispatch Request API call. After an HTTP response is received from the Dispatch Request Component for the HTTP Request made by the component, all logs are pushed to the Database before the compute goes back to sleep. This marks the end of the Initiator Component’s workflow.
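The conversion of a .csv target file into a request body can be sketched in Python as follows. The actual body format is defined by the Dispatch Request API and is not reproduced here, so the field names `dispatchID`, `preFill`, `questionId`, and `input`, as well as both function names, are assumptions made for illustration. The `question_ids` argument stands in for the column-name-to-Question-Id mapping stored in XM.

```python
import csv
import io
from typing import Dict, List


def rows_to_prefills(csv_text: str, question_ids: Dict[str, str]) -> List[List[dict]]:
    """Turn each data row into a list of question/answer pairs.

    The header row supplies the question part; each later row supplies answers.
    """
    reader = csv.DictReader(io.StringIO(csv_text), skipinitialspace=True)
    invitations = []
    for row in reader:
        prefill = [
            {"questionId": question_ids[column.strip()], "input": (value or "").strip()}
            for column, value in row.items()
            # Skip surplus cells (key None) and columns without a mapping.
            if column is not None and column.strip() in question_ids
        ]
        invitations.append(prefill)
    return invitations


def build_request_body(dispatch_id: str, csv_text: str,
                       question_ids: Dict[str, str]) -> List[dict]:
    """Wrap the per-row pre-fills in a single-dispatch request body."""
    return [{"dispatchID": dispatch_id,
             "preFill": rows_to_prefills(csv_text, question_ids)}]
```

Each data row yields exactly one entry in `preFill`, matching the rule that every row translates into a unique invitation.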

4.10.2 Source Code

Links to the serverless compute’s source code are presented below:

- AwsRequestInitiator - an AWS Lambda that uses AWS S3Events as its event-trigger.

- AzureRequestInitiator - an Azure Function that uses the combination of AWS S3Events, AWS SNS Notifications, and Azure HTTP-Triggers as its event-trigger

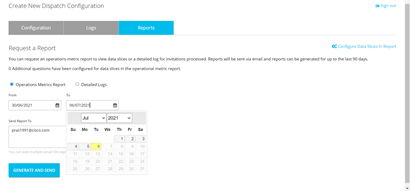

5. Account configuration management

A web-based application with a user interface is provided, which should be used to create a new dispatch configuration. The User Guide covers the features of this front-end application and how they can be configured.

As explained under the “Dispatcher” section, one may configure email vendor details or SMS vendor details to send emails or SMS to the customers. We have provided out-of-the-box reference implementations for Custom SMTP, SparkPost, and MessageBird. However, Partners have the option to add new Email or SMS vendors based on the needs of their clients. To make such a new vendor selectable and configurable in the Account configuration management front end, follow the instructions below.

5.1 Extensibility instructions to add new vendor

A new vendor can be added to the portal manually. Follow the steps below.

Create New Vendor Modal Form

In the file config.html, find the commented section “Add new vendor in a modal here”. Add a new form with a pop-up modal id. Add the required fields as per the API requirements, as shown in the example.

<div class="form__group">

<label for="vendorAPIKey" class="form__label">Api Key</label>

<input type="text" id="vendorAPIKey" name="ApiKey" value="" class="form__field" required

placeholder="Enter Api Key">

</div>

Add Cancel and Save buttons within this form, as in the other vendor forms.

Add a selector input mapping

Map your input fields with the id that is assigned to each of them in the form. This has to be done to convert the data to JSON while saving/editing. Ensure each input element has a name that matches the key of the JSON data that needs to be saved. In the example above, the data takes the form:

data = { ApiKey: '****' }

Create Read Only View

Once the Modal form is designed with the required form fields, create the read-only view. First, add the new vendor name to the dropdown: find the block commented as “Add new Email Vendor name for dropdown selection” and add a new entry for your vendor so that it appears in the selection. Similarly, add an SMS vendor name to the SMS vendor dropdown.

Now add the block to show the values under “New Vendor Read Only View”. Add the data to be displayed as shown in the example.

<h4> URL </h4>

<span id="newVendorURL"></span>

Load data on selection

Now that the read-only form is ready, add a function to load the vendor-specific data from the backend, as is done for SparkPost.

Under the function onEmailSelectChange() in main.js, add a condition to check whether the selected value matches the newly added vendor id in the dropdown. If so, call the function to get data from the backend. This new function gets the data via an AJAX API call and sets it on the read-only form elements created in the previous step. Refer to getSparkPostData for an example.

Open the modal pop up

Adding the vendorName to the dropdown automatically wires the edit button. On clicking the edit button, the modal pop-up needs to be shown for the user to edit. In config-file.html, add the new vendor-related code as described below.

Add the new vendor modal with right ID

Get the cancel button id from the form that we just added. Create an onclick handler to clear the form elements within the form.

Add the callback to open the form pop up like shown below.

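// Open the new vendor's modal when it is the selected SMS vendor
if (document.getElementById('getVendorSms').value == "newVendorModalId") {
    getNewVendorData();                      // load the saved vendor data into the form
    $("body").css({"overflow": "hidden"});   // stop the page behind the modal from scrolling
    newVendorModal.style.display = "block";  // show the modal
}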

Save data from the form

Add a callback to the save button within the new modal form. This new function validates the data in the form, collects it, serializes it to JSON, and makes the API call to the backend. Utility functions like emailFormat, required, and serialize will come in handy to make these calls. Use the vendorEmailUpdateAPI() function for reference and ensure the data is posted to the backend in the required format.

6. Logging and Notifications

6.1 Logging

Overview of logging architecture and system components used for logging

Logging is a critical part of the architecture as it contributes heavily to serviceability. For the components in the partner hosted module, all necessary events are captured as MongoDB documents and pushed to a MongoDB collection. On the Experience Management end, all events are captured in “Event Hub”.

There are 2 broad categories of events:

- Events that are logged purely for contributing towards reporting when reports are to be built out

- Events that are used for troubleshooting and serviceability

The second category of events are all duly categorized into one of the following:

- Debug (D): With DEBUG, you start to include more granular, diagnostic information. Here, you’re probably getting into “noisy” territory and furnishing more information than you’d want in normal production situations. You’re providing detailed diagnostic information for fellow developers, sysadmins, etc.

- Information (I): INFO messages correspond to normal application behavior and milestones. You probably won’t care too much about these entries during normal operations, but they provide the skeleton of what happened. A service started or stopped. You added a new user to the database. That sort of thing.

- Warning (W): You use the WARN log level to indicate that you might have a problem and that you’ve detected an unusual situation. Maybe you were trying to invoke a service and it failed a couple of times before connecting on an automatic retry. It’s unexpected and unusual, but no real harm done, and it’s not known whether the issue will persist or recur. Someone should investigate warnings.

- Error (E): An ERROR is a serious issue and represents the failure of something important going on in your application. Unlike FATAL, the application itself isn’t going down the tubes. Here you’ve got something like dropped database connections or the inability to access a file or service. This will require someone’s attention probably sooner than later, but the application can limp along.

- Fatal (F): FATAL represents truly catastrophic situations, as far as your application is concerned. Your application is about to abort to prevent some kind of corruption or serious problem, if possible. This entry in the log should probably result in someone getting a 3 AM phone call.

6.1.1 Dispatch Request Logs

| Scenarios | Result | Level | Email to group level | Log Message/Email Body |

|---|---|---|---|---|

| Account Configuration not set-up | API request will be rejected | E | Account | Dispatch API cannot process the incoming request because the Dispatches are not yet configured in ACM front-end. Please configure this by logging into Account Configuration Module. |

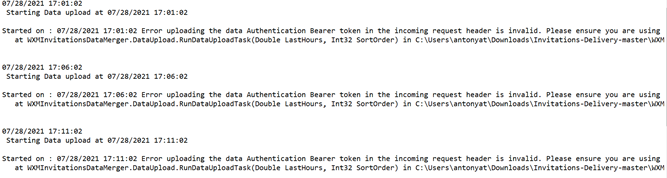

| Authentication failed | API request will be rejected | E | Account | Authentication Bearer token in the incoming request header is invalid. Please ensure you are using correct user credentials or a valid Authentication Bearer token. |

| Max Record size Exceeded | API request will be rejected | E | Account | The API cannot process more than 18,000 records in a single request. Please split the batch into multiple requests and try again. Total records received is - |

| Max dispatches supported exceeded | API request will be rejected | E | Account | The API cannot process more than 15 dispatches in a single request. Please ensure dispatch requests are spread out over time. Total dispatches received is - |

| Sampling type is not configured | Sampling won’t happen on the payload | W | Account | Sampling type is not configured hence all records in the payload are processed. Please ensure sampling type is configured in ACM backend using the “extendedproperties” API. |

| WXM API Failed for dispatch/questions/DP/Settings/Questionnaire | API request will be rejected | E | Account | Dispatch, Delivery Policy , Questionnaire, Active Questions or Settings not found. Please ensure the dispatch configured on partner hosted side is available in Experience Management. |

| failed to get dispatch from memory cache or WXM platform | Complete batch will fail | E | Account | Dispatch not found. Please ensure the dispatch configured on partner hosted side is available in Experience Management. |

| failed to get Delivery Plan from memory cache or WXM platform | Complete batch will fail | E | Account | Delivery Policy not found. Please ensure the dispatch configured on partner hosted side is available in Experience Management. |

| failed to get Active Questions from memory cache or WXM platform | Complete batch will fail | E | Account | Active Questions not found. Please ensure the dispatch configured on partner hosted side is available in Experience Management. |

| failed to get Settings from memory cache or WXM platform | Complete batch will fail | E | Account | Settings not found. Please ensure the dispatch configured on partner hosted side is available in Experience Management. |

| failed to get Survey Questionnaires from memory cache or WXM platform | Complete batch will fail | E | Account | Survey Questionnaire not found. Please ensure the dispatch configured on partner hosted side is available in Experience Management. |

| Exception getting Dispatch/deliverPlan/Question from cache and If not found from WXM | Complete batch will fail | F | Account | Getting API details from MemoryCache failed + { Exception } |

| Unknown exception within a Batch Request in controller | Complete batch will fail | F | Account | Exception in DispatchRequest Controller + {Exception} |

| Unknown exception while validating the Batch Request | Complete batch will fail | F | Account | Exception in ProcessInvitation in DispatchRequest Controller + {Exception} |

| No hashing algo configured | Hashing algo not found from WXM API, so switching to default | D | Dispatch | Algorithm for hashing of PII data is either missing or configured incorrectly. Default hashing algorithm SHA512 is being used for now as fallback. Please check and use a valid algorithm in Experience Management. |

| Hash Algo configured | Valid Hash Algo found | D | Dispatch | Hashing algorithm for PII is configured as: |

| Exception while validating every dispatch | One or more dispatches can fail | F | Account | Exception in CheckDispatchID + {Exception} |

| Dispatch id passed is not a valid one created in WXM | A dispatch will be ignored from the batch | E | Account | Dispatch passed in the API request is not valid. Please ensure a valid dispatch is configured in Account Configuration Module and same is passed in the API request as well. |

| A dispatch request is passed which is not configured in the partner hosted end through SPA | All records in the dispatch will be rejected | E | Account | Dispatch configuration is missing in Account Configuration Module. Please ensure a valid dispatch is configured in Account Configuration Module and same is passed in the API request as well. |

| Dispatch is paused | A dispatch will be ignored from the batch and not processed | E | Dispatch | Dispatch configured to be used to send invites is paused. Invites will not go out unless this is resolved. Please sign in to Experience Management and un-pause the Dispatch. Also note, any changes in Experience Management may take up to an hour to reflect in Dispatch Request API. |

| Dp is paused | A dispatch will be ignored from the batch | E | Dispatch | Delivery Policy configured under Dispatch to be used to send invites is paused. Invites will not go out unless this is resolved. Please sign in to Experience Management and un-pause the Delivery Policy. Also note, any changes in Experience Management may take up to an hour to reflect in Dispatch Request API. |

| UniqueId question missing in DeliveryPlan | A dispatch will be ignored from the batch | E | Dispatch | Unique User Identifier (UUID) configured in Delivery Policy used in Dispatch is not available in the questionnaire. This will impact fatigue rules and invites may go out to customers for multiple surveys. Please ensure the UUID is added as a pre-fill question in the questionnaire. |

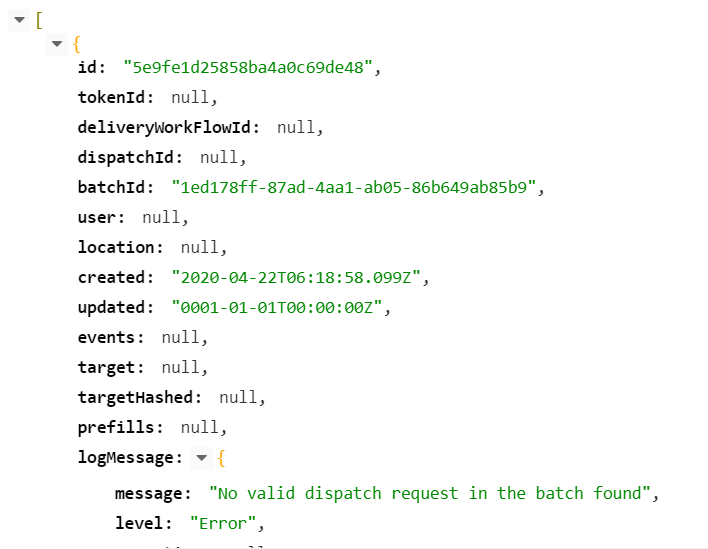

| No valid dispatch request in the batch found | Batch failed | E | Account | No valid dispatch in the batch found. Please setup a valid dispatch configuration in Account Configuration Module. |

| Unknown Exception in a Dispatch while validation | A dispatch out of batch will fail | F | Account | Exception in a Dispatch in CheckDispatchData + {Exception} |

| Unknown Exception in overall Dispatch while validation | Batch failed | F | Account | Exception in CheckDispatchData + {Exception} |

| Exception validating DP and getting valid channels | Batch failed | F | Account | Exception in GetChannelFromDP + {Exception} |

| Invalid or unsupported channels configured in dispatch | A dispatch will be ignored from the batch | E | Dispatch | An error occurred while using the Delivery Policy configured under Dispatch. Please make sure the Delivery Policy is configured correctly with supported channels. |

| records added to internal queue for bulk import | Success | D | Dispatch | {prefill Responses?.Count} records accepted for further validation |

| All records are rejected due to invalid channel or data | A dispatch will be ignored from the batch | E | Dispatch | All the records received in the API requests are rejected. Please ensure the channels and UUID are configured correctly in the Delivery policy in Experience Management. Also ensure the Email or SMS values are sent in correct format. |

| Invalid prefill for a record | Prefill will be skipped and token will be created | W | Dispatch | Some of the prefills with following question IDs are ignored while processing the records. This may be due to missing question in the questionnaire. Please verify and reconfigure this in the Experience Management. <Prefill QID 01> <Prefill QID 02> |

| Record Rejected due to Invalid or missing Email or Mobile number | Tokens for the record will not be created | F | Report | Invites were not sent to the following records from the data file: <UUID 01> <UUID 02>… |

| Record rejected due to Common Identifier missing | Tokens for the record will not be created | F | Report | Invites were not sent for some records with missing email or mobile number. Please check the data file for missing data for more details. |

| Record is Suppressed | Tokens for the record will not be created | I | Report | Invites were not sent to the following records from the data file: <UUID 01> <UUID 02>… |

| Record is suppressed due to sampling rules | For suppressed records, tokens won’t be created | I | Report | Invites were not sent to the following records from the data file as per fatigue rules. Below is a list of records for which invites were not sent. <UUID 01> <UUID 02>… |

| Bulk request failed due to Inactive or Invalid Dispatch | No tokens will be created and hence nothing will be sent. All records in a dispatch will fail | E | Account | Invalid or inactive Dispatch requested. Please ensure the Dispatch is configured correctly in Webex Experience Management and is not paused |

| Bulk request failed due to Inactive or Invalid DeliveryPlan | No tokens will be created and hence nothing will be sent. All records in a dispatch will fail | E | Account | Invalid or inactive DeliveryPlan. Please ensure the DeliveryPlan is configured correctly in Webex Experience Management and is not paused |

| Bulk request failed due to Invalid token Template | No tokens will be created and hence nothing will be sent. All records in a dispatch will fail | E | Account | Invalid Token Template attached to Dispatch. Please ensure the Token Template is configured correctly and added to Dispatch. |

| Bulk request failed due to Invalid content template | No tokens will be created and hence nothing will be sent. All records in a dispatch will fail | E | Account | Invalid Message Template configured in Dispatch. Please ensure Message Template is configured correctly and added to valid channels against each Message. |

| Bulk request failed to WXM | No tokens will be created and hence nothing will be sent. All records in a dispatch will fail | E | Account | Request to send invites failed due to an error. Please check the configuration for Delivery Policy, Token Template, Message Templates and Dispatch in Experience Management. |

| Queue for batching is missing | Whole batch will fail | E | Dispatch | Queue to batch the records for bulk token creation is not found. Please verify and correct the batching queue type using “extendedProperties” API in Account Configuration Management. |

| x number of record for a batch id is pushed to Queue for bulk token generation | Success | D | Dispatch | {x} records added to queue {Queuename} for bulk token generation |

| Dispatch Completely accepted for processing | Success | D | Dispatch | Accepted for processing {count} records |

| A few records from the dispatch are accepted for processing | Success | D | Dispatch | Accepted for processing {Count}, Rejected {Count} |

| Multiple status returned | Success | D | Account | Multiple dispatch status returned in response |

| Error while making the API request to WXM | Batch/Token creation failed | E | Account | $"StatusCode: {responseMessage.StatusCode} " + $"ResponseMessage: {responseMessage.ToString()} Url: {url}" |

| Unknown Exception while making API request to WXM | Batch/Token creation failed | F | Account | HTTP Send failed for HTTPWrapper sendAsync + {Exception} |

| Auth token not generated for Bulk Token API in Background task | Token creation failed | E | Account | Authentication Bearer token not generated. Please ensure you are using correct user credentials. |

| DB update of bulk token | Token creation and updated in DB | D | Account | Update to DB completed for bulk token response of size: {Count} |

| Exception while generating bulk token creation | Token creation failed | F | Account | Bulk Token API failed due to unknown exception + {Exception} |

6.1.2 Dispatcher Logs

| Scenarios | Result | Level | Email to group level | Log Message/Email Body |

|---|---|---|---|---|

| Queue-Trigger has dequeued the invitation | Invitation is under processing | D | Dispatch | Dequeued |

| Queue-Trigger has validated the invitation against Null-Checks | Invitation is under processing | D | Dispatch | Validated (Additional Token Parameters: ____) |

| Queue-Trigger has invalidated the invitation due to failure against Null-Checks | Invitation won’t be dispatched | E | Dispatch | Invalidated as Token ID, Batch ID or Dispatch ID is not available |

| Queue-Trigger has failed to identify invitation’s channel as both email Id and mobile number are attached with the invitation | Invitation won’t be dispatched | E | Dispatch | Channel couldn’t be inferred as both email ID and mobile number are available |

| Queue-Trigger has failed to identify invitation’s channel as both email Id and mobile number aren’t attached with the invitation | Invitation won’t be dispatched | E | Dispatch | Channel couldn’t be inferred as both email ID and mobile number are not available |

| Queue-Trigger has correctly identified invitation’s channel as Email | Invitation is under processing | D | Dispatch | Channel inferred as Email |

| Queue-Trigger has correctly identified invitation’s channel as SMS | Invitation is under processing | D | Dispatch | Channel inferred as SMS |

| Queue-Trigger has failed to find the invitation’s corresponding EventLog-Object from the EventLog collection | Invitation won’t be dispatched | E | Dispatch | Corresponding Event Log was not found in the database |

| Queue-Trigger has successfully found the invitation’s corresponding EventLog-Object from the EventLog collection | Invitation is under processing | D | Dispatch | Corresponding Event log was found (id: _____) |

| Queue-Trigger has prepared the invitation’s Hash-Look-Up Dictionary for performing the PII replacements | Invitation is under processing | D | Dispatch | Corresponding Hash Look-Up Dictionary has been configured |

| Queue-Trigger couldn’t find the invitation’s dispatch details within the AccountConfiguration collection | Invitation won’t be dispatched | E | Dispatch | Corresponding Dispatch was not found in the Account Configuration Module |

| Queue-Trigger has found the dispatch’s vendor name within the AccountConfiguration collection | Invitation is under processing | D | Dispatch | Corresponding Dispatch’s Vendor Name has been found (name: ______) |

| Queue-Trigger couldn’t find the dispatch’s vendor name within the AccountConfiguration collection | Invitation won’t be dispatched | E | Dispatch | Corresponding Dispatch’s Vendor Name is missing from the Account Configuration Module |

| Queue-Trigger has found the vendor details within the AccountConfiguration collection | Invitation is under processing | D | Dispatch | Corresponding Vendor Details are available (vendor details: ______) |

| Queue-Trigger couldn’t find the vendor details within the AccountConfiguration collection | Invitation won’t be dispatched | E | Dispatch | Corresponding Vendor Details are missing from the Account Configuration Module |

| Queue-Trigger has found the vendor type to be Bulk-Send | Invitation is under processing | D | Dispatch | Corresponding Vendor is of type Bulk-Send. Invitation will now be inserted into the database. |

| Queue-Trigger has found the vendor type to be Single-Send | Invitation is under processing | D | Dispatch | Corresponding Vendor is of type Single-Send. Invitation will now be prepared for dispatched. |

| Queue-Trigger/Time-Trigger has found the vendor implementation object in its runtime | Invitation is under processing | D | Dispatch | Corresponding Vendor implemetation object was found in the serverless compute’s memory (vendor details: ______) |

| Queue-Trigger/Time-Trigger has failed to find the vendor implementation object in its runtime | Invitation won’t be dispatched | E | Dispatch | Corresponding Vendor implemetation object was not found in the serverless compute’s memory |

| Queue-Trigger/Time-Trigger has successfully dispatched the invitation to the required vendor for end-delivery | Invitation has been successfully dispatched | I | Dispatch | Successfully Dispatched (via: _______) |

| Queue-Trigger/Time-Trigger has failed to dispatch the invitation to the required vendor for end-delivery | Invitation has failed to dispatch | E | Dispatch | Failed at Dispatch (via: _______) |

| Queue-Trigger/Time-Trigger has run into an Internal-Exception | Invitation has failed to dispatch | F | Dispatch | Internal Exception + Details about the exception |

| Time-Trigger has read the Bulk-Send invitation from the database for processing | Invitation is under processing | D | Dispatch | Read from database into memory (Bulk-Send Vendor: _______) |

| Time-Trigger has started | Bulk-Send invitations will be processed, given there is a presence of such invitations in the BulkMessage collection | D | Account | Time Trigger Serverless Compute has now started |

| Time-Trigger has ended | Bulk-Send invitations have been processed, given there was a presence of such invitations in the BulkMessage collection | D | Account | Time Trigger Serverless Compute has now ended (Invitations Processed: _______) |

| Time-Trigger started later than intended | - | W | Account | Time Trigger Serverless Compute is running late |
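The channel-inference rows of the table above amount to a small decision rule: exactly one of email ID or mobile number must be present. The following is a minimal sketch of that rule, not the Queue-Trigger's actual code. The function name `infer_channel` and the choice to treat blank strings as missing are assumptions made for illustration.

```python
from typing import Optional, Tuple


def infer_channel(email: Optional[str], mobile: Optional[str]) -> Tuple[str, str]:
    """Return (log level, log message) for the channel-inference step.

    Hypothetical helper: blank or whitespace-only values count as missing,
    which is an assumption and not stated in the table.
    """
    has_email = bool(email and email.strip())
    has_mobile = bool(mobile and mobile.strip())

    if has_email and has_mobile:
        return ("E", "Channel couldn't be inferred as both email ID and mobile number are available")
    if not has_email and not has_mobile:
        return ("E", "Channel couldn't be inferred as both email ID and mobile number are not available")
    if has_email:
        return ("D", "Channel inferred as Email")
    return ("D", "Channel inferred as SMS")
```

An invitation carrying only an email ID yields a D-level entry, while one carrying both or neither yields an E-level entry and is not dispatched.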

6.1.3 Initiator Logs

| Scenarios | Result | Level | Email to group level | Log Message/Email Body |

|---|---|---|---|---|

| A target file is uploaded to the S3 Bucket | Request to initiate a dispatch is accepted by the Initiator | D | Account | A request to initiate a dispatch was received from the S3Bucket |

| The target file is uploaded to the S3 Bucket but not into a directory | Request to initiate the dispatch is aborted | E | Account | The dispatch cannot be initiated as the uploaded file wasn’t uploaded into a directory as required |

| The config.json cannot be retrieved from the S3 Bucket | Request to initiate the dispatch is aborted | E | Account | The required config.json couldn’t be retrieved from the S3Bucket. Reason => _______ |

| The config.json retrieved is empty | Request to initiate the dispatch is aborted | E | Account | The retrieved config.json was Empty |

| The config.json retrieved is not empty | Request to initiate the dispatch is under processing | D | Dispatch | The required config.json was successfully retrieved from the S3Bucket |

| The target file cannot be retrieved from the S3 Bucket | Request to initiate the dispatch is aborted | E | Dispatch | The uploaded file couldn’t be retrieved from the S3Bucket. Reason => _______ |

| The target file retrieved is empty | Request to initiate the dispatch is aborted | E | Dispatch | The retrieved uploaded file was Empty |

| The target file retrieved is not empty | Request to initiate the dispatch is under processing | D | Dispatch | The uploaded file was successfully retrieved from the S3Bucket |

| The config.json retrieved is configured incorrectly | Request to initiate the dispatch is aborted | E | Dispatch | The required config.json hasn’t been configured correctly. Please check again! |

| The config.json retrieved is configured correctly | Request to initiate the dispatch is under processing | D | Dispatch | The required config.json has been configured correctly |

| Incorrect account credentials are stored in the DB | Request to initiate the dispatch is aborted | E | Dispatch | The login into WXM failed. As a result, a Bearer-Token couldn’t be fetched |

| Correct account credentials are stored in the DB | Request to initiate the dispatch is under processing | D | Dispatch | The login into WXM succeeded. As a result, a Bearer-Token was fetched |

| The config.json contains an unknown/incorrect dispatch-id | Request to initiate the dispatch is aborted | E | Dispatch | The config.json specifies an unknown Dispatch-Id |

| The config.json contains a valid dispatch-id corresponding to a Not-Live dispatch | Request to initiate the dispatch is aborted | E | Dispatch | The config.json specifies a Not-Live Dispatch |

| The config.json contains a valid dispatch-id corresponding to a dispatch that has no prefill-questions | Request to initiate the dispatch is aborted | E | Dispatch | The config.json specifies a Dispatch with no associated Questions |

| The config.json contains a valid dispatch-id corresponding to a dispatch that is Live and has prefill-questions | Request to initiate the dispatch is under processing | D | Dispatch | The required Dispatch details, along with its Questions, were successfully fetched from XM |

| None of the target file headers can be mapped to a WXM Question | Request to initiate the dispatch is aborted | E | Dispatch | The uploaded file cannot be processed as none of the headers could be mapped to a Question-Id that belongs to the corresponding Dispatch. Available Headers in XM for the corresponding Dispatch: _______ |

| The target file contains duplicate headers | Request to initiate the dispatch is aborted | E | Dispatch | The uploaded file cannot be processed as it has the following duplicate headers: _______ |

| The target file contains no duplicate headers and 1 or more headers are mapped to a WXM Question | Request to initiate the dispatch is under processing | I | Dispatch | The uploaded file’s headers are ready for processing. Details => _______ . Available Headers in XM for the corresponding Dispatch: _______ |

| The target file has no valid answer rows (in case of a .csv file) or has no answer rows | Request to initiate the dispatch is aborted | E | Dispatch | The uploaded file cannot be processed as none of the rows are correctly configured. |

| The target file has 1 or more (valid) rows | Request to initiate the dispatch is under processing | I | Dispatch | The uploaded file’s rows are ready for processing. Details => _______ |

| The dispatch API server does not return a 2xx response | Request to initiate the dispatch is unsuccessful | E | Dispatch | The HTTP Response received |

| The dispatch API server does return a 2xx response | Request to initiate the dispatch is successful | I | Dispatch | The HTTP Response received |
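The header checks in the table above (no mappable headers, duplicate headers, otherwise ready) can be sketched as follows. This is an illustrative sketch only: the function name `check_headers`, the `question_ids_by_header` mapping, the shortened messages, and the order of the two checks are assumptions, not the Initiator's actual implementation.

```python
from collections import Counter
from typing import Dict, List, Tuple


def check_headers(headers: List[str], question_ids_by_header: Dict[str, str]) -> Tuple[str, str]:
    """Return (log level, short message) for the uploaded file's header row.

    `question_ids_by_header` is a hypothetical lookup from a header name to
    the Question-Id it maps to in the corresponding Dispatch.
    """
    # Headers that can be mapped to a question of the Dispatch.
    mapped = {h: question_ids_by_header[h] for h in headers if h in question_ids_by_header}
    if not mapped:
        return ("E", "no header maps to a Question-Id")

    # Headers that occur more than once in the file.
    duplicates = sorted(h for h, count in Counter(headers).items() if count > 1)
    if duplicates:
        return ("E", "duplicate headers: " + ", ".join(duplicates))

    return ("I", f"{len(mapped)} header(s) mapped")
```

For example, `check_headers(["Name", "City"], {"Name": "q1"})` returns an I-level result because one header maps and none repeats.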

6.1.4 How to access Logs using API

We have exposed APIs that can be used to pull the logs from both the partner-hosted side and the Experience Management side.

- Prerequisites to access the EventLog API

  - An Experience Management bearer token must be passed in the request header for authorization

  - The Web API must be deployed to obtain the Base URL

- Method: POST

- API URL: Base URL + /api/EventLog

- Authorization:

  - To access this API, retrieve a bearer token using the Experience Management LoginToken API and pass it in the “Authorization” header as a bearer token

- API request

{ "BatchId": "", "DispatchId": "", "Token": "", "Created": "", "Target": "" }

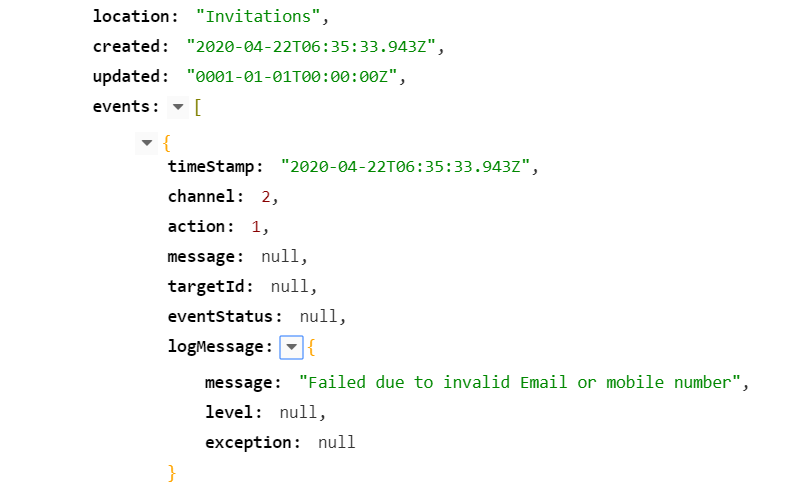

- API Response

[

{

"id": "5e9990b2ddc510a0d4d3cf2c",

"tokenId": null,

"deliveryWorkFlowId": "5e569ba530bb351664da3622",

"dispatchId": "5e56a09830bb351664da3624",

"batchId": "69afacb7-8cdb-4c1e-ada5-e01c67e27243",

"user": null,

"location": "Invitations",

"created": "2020-04-17T11:19:14.644Z",

"updated": "0001-01-01T00:00:00Z",

"events": [

{

"timeStamp": "2020-04-17T11:19:14.644Z",

"channel": 2,

"action": 1,

"message": null,

"targetId": null,

"eventStatus": null,

"logMessage": {

"message": "Failed due to invalid Email or mobile number",

"level": null,

"exception": null

}

}

],

"target": " 919953973304 ",

"targetHashed": "sha512:a285024929f2ae46c1abd6014cad64c68f1a4b4190290e8a2262665d84f8d97ea93fa3d8db438f81247e17668f629f53b73295fe0dc35ce47f9c660a9eaef4f8",

"prefills": [

{

"questionId": "5e52b55330bb2cee102b9a39",

"input": "Kapil - 1",

"input_Hash": "Kapil - 1"

},

{

"questionId": "5e52b55e30bb2cee102b9a3c",

"input": " kk04@cisco.com ",

"input_Hash": "sha512:f0a19d5ea6630aa2f75f86c1d31e5762fe8c9a432a4ec3379b235f062af0e7a8a2b6c5597839af50d8b76bea2526700629cad6eb89d0ca729ebb416c3504478c"

},

{

"questionId": "5e52b56b30bb2cee102b9a3f",

"input": " 919953973304 ",

"input_Hash": "sha512:a285024929f2ae46c1abd6014cad64c68f1a4b4190290e8a2262665d84f8d97ea93fa3d8db438f81247e17668f629f53b73295fe0dc35ce47f9c660a9eaef4f8"

},

{

"questionId": "5e52b58230bb2cee102b9a42",

"input": "123@gmail.com",

"input_Hash": "123@gmail.com"

},

{

"questionId": "5e54dbdd30bb2c6018f488ca",

"input": "Delhi",

"input_Hash": "Delhi"

}

],

"logMessage": null,

"tags": [

"UserData"

]

}

]

- Error scenarios

- 401 Unauthorized if the bearer token is wrong

- Empty response [] if the API request is wrong

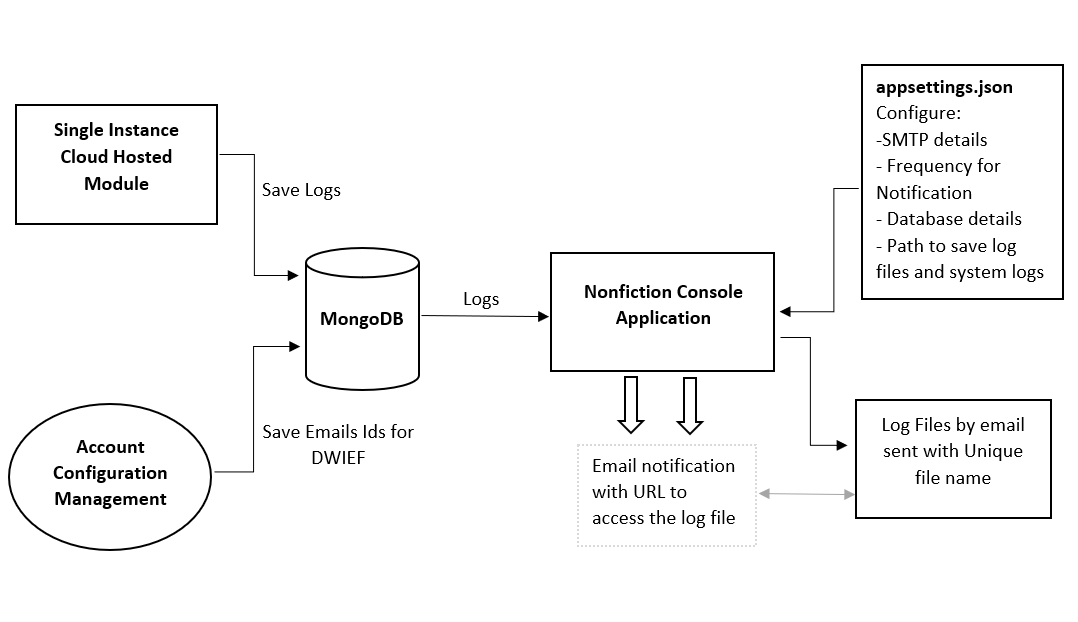
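As a rough illustration of the request described in this section, the sketch below builds the POST to Base URL + /api/EventLog using only the Python standard library. The function name `build_event_log_request` and the `Content-Type: application/json` header are assumptions; the body fields and the Authorization header come from the description above.

```python
import json
import urllib.request
from typing import Dict


def build_event_log_request(base_url: str, bearer_token: str,
                            filters: Dict[str, str]) -> urllib.request.Request:
    """Build (but do not send) the EventLog query request."""
    # Field names taken from the sample API request; all default to empty.
    body = {"BatchId": "", "DispatchId": "", "Token": "", "Created": "", "Target": ""}
    unknown = set(filters) - set(body)
    if unknown:
        raise ValueError(f"unknown filter fields: {sorted(unknown)}")
    body.update(filters)

    return urllib.request.Request(
        url=base_url.rstrip("/") + "/api/EventLog",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {bearer_token}",
            "Content-Type": "application/json",  # assumed, not stated in the document
        },
        method="POST",
    )
```

The request can then be sent with `urllib.request.urlopen(request)`, which raises `urllib.error.HTTPError` on a 401 response. A successful call is expected to return a JSON array, which is empty when nothing matches.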

6.2 Notifications

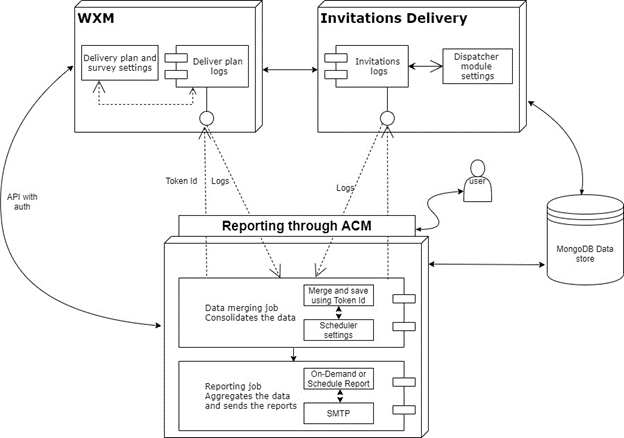

Notifications can be seen as an extension of our built-in logging capabilities. As explained under the “Logging” section, all events are categorized under the DWIEF categories. The accompanying architectural diagram provides an overview of the module.

6.2.1 Functionalities

- Notifications are sent for a consolidated set of Logs either grouped by Dispatch or at an Account level.

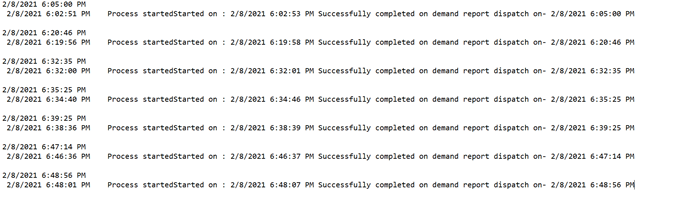

- Both Dispatch-level and Account-level notifications can be sent in near real-time (as often as every 1 minute) and at EOD (every day at hour 00 UTC).

- Errors and Failures are considered for real-time notifications, and all other categories of logs are notified at EOD.

- Notifications can be sent by email only.

- When a real-time notification is sent, the logs it contains are marked as IsNotified to avoid sending the same logs in multiple notifications.

- An email displays a maximum of 10 rows of logs. If more logs are available, they are saved as a “.log” file, and a link to download the file is included in the email body.

- The log file can be downloaded only after basic authentication using a login and password.

- System logs are also available and can be used for debugging the application.

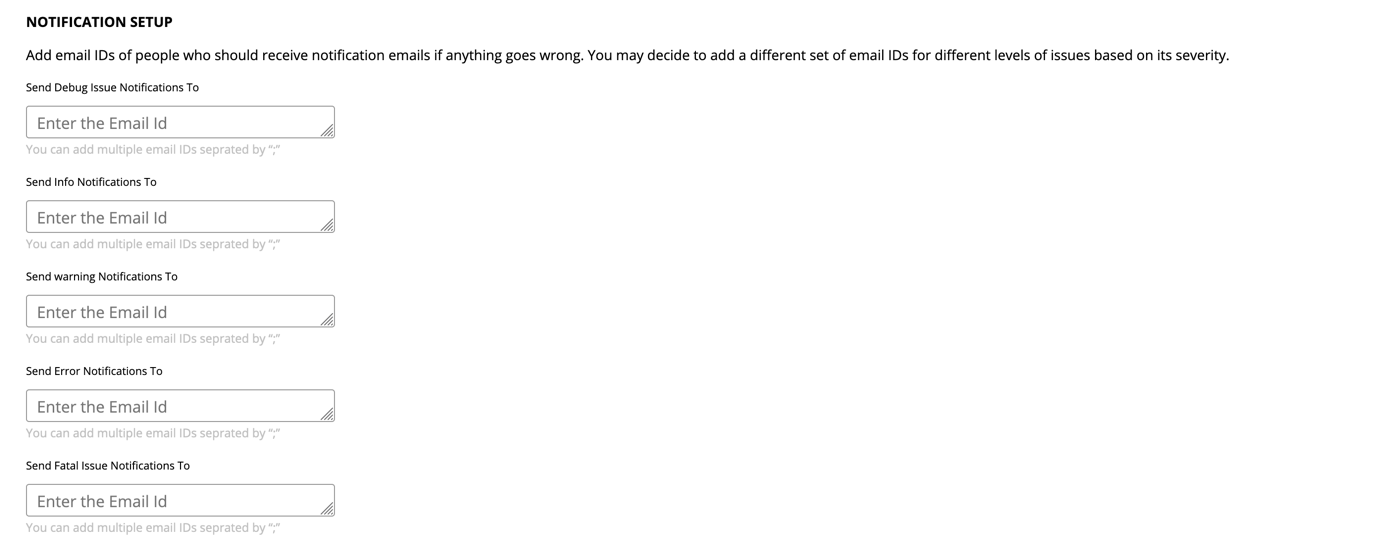
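The IsNotified flag and the 10-row email limit described above can be sketched as follows. This is a simplified illustration, not the module's code: the function name `prepare_notification` and the dictionary-based log records are hypothetical, and the sketch assumes that the “.log” file holds the full set of logs rather than only the overflow, which the text does not specify.

```python
from typing import Dict, List, Tuple

MAX_ROWS_IN_EMAIL = 10  # limit stated in the list above


def prepare_notification(logs: List[Dict]) -> Tuple[List[Dict], List[Dict]]:
    """Return (rows shown in the email, rows written to the .log file).

    Logs already flagged IsNotified are skipped, so the same log is not
    sent twice; every log picked up here is flagged in place.
    """
    pending = [log for log in logs if not log.get("IsNotified")]
    for log in pending:
        log["IsNotified"] = True

    if len(pending) <= MAX_ROWS_IN_EMAIL:
        return pending, []  # everything fits in the email body
    # Assumption: the downloadable file contains all pending logs.
    return pending[:MAX_ROWS_IN_EMAIL], pending
```

Calling the function a second time on the same list returns two empty lists, because every log was flagged by the first call.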

6.2.2 Subscribe to Notifications

- Dispatch Level Notifications

Users can subscribe to notifications for events that are logged at the Dispatch level. This is done by adding the subscribers’ email IDs to the specific event category in the partner-hosted front end.

Notifications are delivered in 2 modes.

- Near real-time: The notification interval is configurable and can be as short as 1 minute. Error and Failure/Fatal logs are delivered as real-time notifications.

- EOD: Every day, during hour 0 UTC, a notification is sent for the log levels not included in real-time, i.e. Debug, Warning, and Information.

Note: Real-time notifications can be configured to include both Error and Failure/Fatal logs. They can also be configured to include only Failures, with all other levels delivered at EOD.

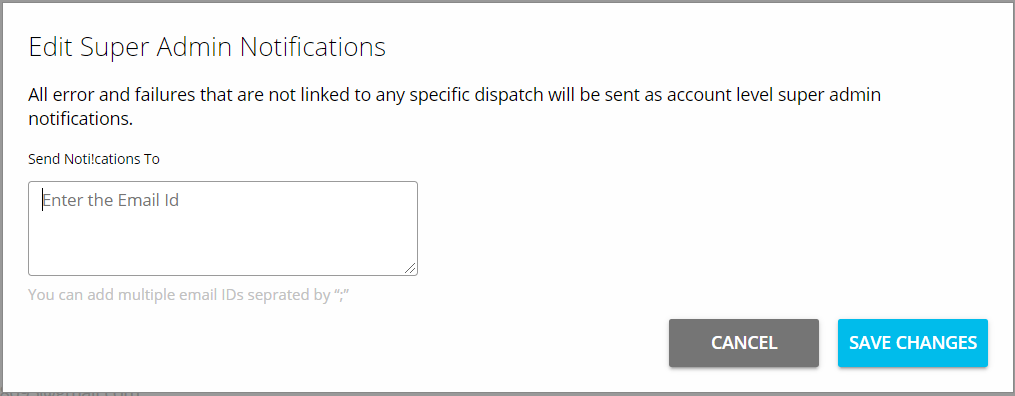
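The split between the two delivery modes can be expressed as a configurable set of real-time levels, with every other level falling through to EOD. The sketch below assumes single-letter level codes matching the DWIEF categories. The names `route_by_level` and `DEFAULT_REALTIME` are hypothetical and do not reflect the actual configuration schema.

```python
from typing import Dict, FrozenSet, Iterable, List

ALL_LEVELS = frozenset("DWIEF")    # Debug, Warning, Information, Error, Failure
DEFAULT_REALTIME = frozenset("EF")  # Error and Failure go out in near real-time


def route_by_level(levels: Iterable[str],
                   realtime_levels: FrozenSet[str] = DEFAULT_REALTIME) -> Dict[str, List[str]]:
    """Assign each log level to the 'realtime' or 'eod' delivery mode."""
    routed: Dict[str, List[str]] = {"realtime": [], "eod": []}
    for level in levels:
        if level not in ALL_LEVELS:
            raise ValueError(f"unknown log level: {level!r}")
        mode = "realtime" if level in realtime_levels else "eod"
        routed[mode].append(level)
    return routed
```

Passing `frozenset("F")` as `realtime_levels` models the alternative configuration in the note, where only Failures are sent in real-time and Errors move to the EOD notification.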

- Account Level Notifications

A few logs are not attached to any Dispatch but are at an account level. These can be accumulated and sent in real-time or at EOD.

One set of email IDs can be configured in the ACM for this category of notifications. All account-level logs will be delivered to these email IDs at EOD.

Examples: failure to authenticate the bearer token, or failure to fetch data such as Delivery Policy, Dispatch, or Questions from WXM.

6.2.3 Logs processing details

- All the logs are added to MongoDB in a collection named “EventLog”. The log levels and the messages are defined here.

- A sample EventLog is shown below

{

"_id" : "5e9969c4fd16580fd0c24753",

"TokenId" : "CCTRISH-721309",

"DeliveryWorkFlowId" : null,

"DispatchId" : "5e9099b7cb93e8c9e8f7119a",

"BatchId" : "a8b7442a-a85f-424f-9728-d8d7937ffa1b",

"User" : "cctrish",